As demand for rapid testing and deployment of applications grew alongside ever-faster business cycles, organizations were compelled to innovate to keep up with a fast-paced business environment.

The quest to modernize existing applications and build new ones with agile workflows led organizations to containers. Containerization technology is nearly as old as virtualization. However, containers did not generate much excitement until Docker burst onto the scene in 2013 and sparked intense interest among developers and other IT professionals.

Today, major technology companies such as Google, Amazon, Microsoft, and Red Hat, to name a few, have all embraced containers.

Why Containers?

One challenge developers have long faced is that the computing environment differs at each stage of software development. Problems arise when software that works in one stage meets a different environment in the next.

For example, an application may run flawlessly in a testing environment running Python 3.6, yet behave unexpectedly, return errors, or crash outright when moved to a production environment running Python 3.9.
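Python offers a real case of this kind of breakage. `time.clock()` existed in Python 3.6 but was removed in Python 3.8. Code that calls it therefore passes its tests on a 3.6 test server and fails with an `AttributeError` on a 3.9 production server. The sketch below shows the kind of version-specific workaround teams end up writing when environments drift apart. The helper name `get_timer` is hypothetical and chosen only for illustration:

```python
import sys
import time

def get_timer():
    """Hypothetical helper: pick a timer function that exists on this interpreter."""
    # time.clock() existed in Python 3.6 but was removed in Python 3.8,
    # so calling it directly works on 3.6 and raises AttributeError on 3.9.
    if hasattr(time, "clock"):
        return time.clock
    # time.perf_counter() (available since Python 3.3) is the portable replacement.
    return time.perf_counter

if __name__ == "__main__":
    timer = get_timer()
    print(f"Python {sys.version_info.major}.{sys.version_info.minor} uses time.{timer.__name__}")
```

A container avoids the need for such shims by shipping the exact interpreter version the code was tested against.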

Containers emerged to address this challenge. They ensure that applications run reliably as they move from one computing environment to the next at every stage of software development, from the developer's PC all the way to production. The software environment is not the only source of such inconsistencies, either: differences in network topology and security policies can cause them too.

What are Containers?

A container is an isolated software unit that packages an application's binary code, libraries, executables, dependencies, and configuration files together so that the application runs smoothly when moved from one computing environment to another. It does not carry its own operating system kernel, which makes it lightweight and easily portable.

A container image is a standalone, lightweight, executable package that bundles everything required to run the application. At runtime, a container is created from the image. With Docker, for example, a Docker image becomes a Docker container when it is run on Docker Engine. Docker is a platform for building and running containerized applications.
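The image/container relationship can be made concrete with a toy Python model. The `Image` and `Container` classes are hypothetical and are not Docker's API. The model assumes the common layered design: the image is read-only, and each container created from it gets its own writable layer. As a result, changes made inside one container affect neither the image nor any other container started from it:

```python
import itertools
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Image:
    """Hypothetical model of a container image: a read-only bundle of files."""
    name: str
    files: tuple  # (path, contents) pairs baked in at build time

_next_id = itertools.count(1)

@dataclass
class Container:
    """Hypothetical model of a container: an image plus a private writable layer."""
    image: Image
    id: int = field(default_factory=lambda: next(_next_id))
    writable_layer: dict = field(default_factory=dict)

    def read(self, path):
        # Files written in this container shadow the image's read-only copy.
        if path in self.writable_layer:
            return self.writable_layer[path]
        return dict(self.image.files)[path]

    def write(self, path, data):
        # Writes land in this container's own layer; the image never changes.
        self.writable_layer[path] = data

if __name__ == "__main__":
    app = Image("myapp:1.0", (("/app/config.ini", "debug=false"),))
    a, b = Container(app), Container(app)   # two containers, one image
    a.write("/app/config.ini", "debug=true")
    print(a.read("/app/config.ini"))        # debug=true
    print(b.read("/app/config.ini"))        # debug=false
```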

Containers run isolated from one another and from other processes on the host, so containerized applications behave consistently regardless of the computing environment or infrastructure. This is why a developer can build an application on their own laptop and readily deploy it to a server.

The consistency and reliability of running containers give developers peace of mind in knowing that their applications will run as expected no matter where they are deployed.

How are Containers Different from Virtual Machines?

Containers and virtual machines have one thing in common: both operate in a virtualized environment. Containerization is, in a sense, a form of virtualization, one that virtualizes the operating system rather than the hardware. However, containers differ from virtual machines in several ways.

Virtual Machines

A virtual machine, also referred to as a virtual instance or VM for short, is an emulation of a physical server or PC. Virtualization is the technology that makes virtual machines possible. The concept of virtualization dates back to the early 1970s and laid the foundation for the first generation of cloud technology.

In virtualization, an abstraction layer is created on top of a bare-metal server or computer hardware. This makes it possible for the hardware resources of a single server to be shared across multiple virtual machines.

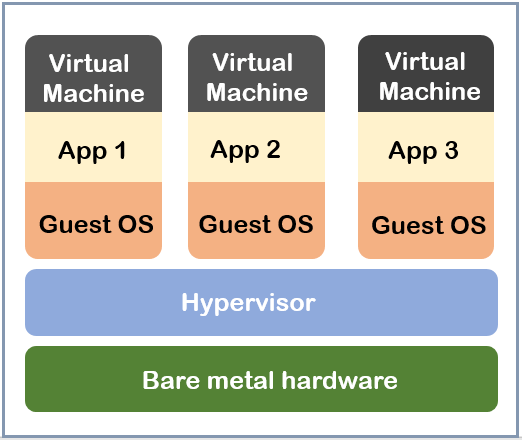

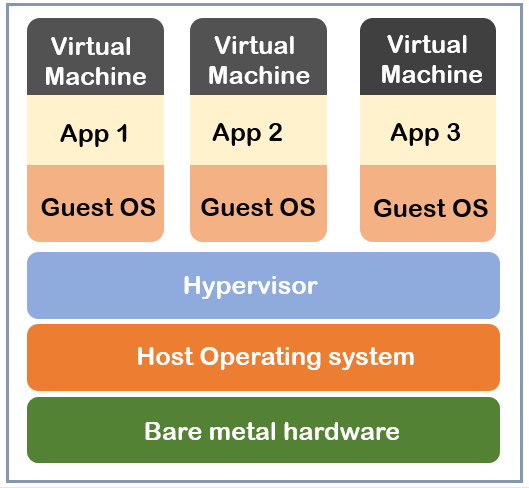

The software that creates this abstraction layer is called a hypervisor. The hypervisor separates the virtual machine and its guest OS from the actual bare-metal hardware. Each virtual machine therefore sits on top of the hypervisor, which makes the hardware resources available to it through the abstraction layer.

Virtual machines run a complete operating system (the guest OS), which is independent of the underlying operating system (the host OS) on which the hypervisor is installed. The guest OS then provides a platform to build, test, and deploy applications alongside their libraries and binaries.


There are two types of hypervisors:

Type 1 Hypervisor (Bare Metal Hypervisor)

This hypervisor is installed directly on a physical server's hardware. No operating system sits between the hypervisor and the hardware, hence the name bare-metal hypervisor. It delivers excellent performance, since resources are not shared with a host operating system.

Because of their efficiency, Type 1 hypervisors are mostly used in enterprise environments. Examples include VMware ESXi and KVM.

Type 2 Hypervisor

This is also regarded as a hosted hypervisor. It is installed on top of the host operating system and shares the underlying hardware resources with the host OS.

Type 2 hypervisors are ideal for small computing environments and are mostly used for testing operating systems and for research. Examples include Oracle VirtualBox and VMware Workstation Pro.

The Drawbacks of Virtual Machines

Virtual machines tend to be large (often several gigabytes), slow to start and stop, and resource-hungry, which can lead to hang-ups and sluggish performance on hosts with limited resources. As a result, a virtual machine is considered bulky and carries high overhead costs.

Containers

Unlike a virtual machine, a container does not require a hypervisor. A container runs on top of a host operating system, whether on a physical server or a virtual machine, and shares that operating system's kernel. Containers built from the same image can also share its read-only layers, such as common libraries and binaries. Multiple containers can run on the same system, each running its own set of applications and processes isolated from the rest. Popular container platforms include Docker and Podman.

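Rather than relying on a hypervisor, containers are kept apart by kernel features. Namespaces give each container its own view of processes, network interfaces, and the file system, and control groups (cgroups) limit how much CPU and memory it can use. All of this happens while the container still runs on the host's kernel. Because they do not boot an operating system of their own, containers are exceptionally lightweight, sometimes just a few megabytes. They take up less space, use fewer resources, and start and stop quickly, and a single host can run more containerized applications than virtual machines.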
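On Linux, both halves of this arrangement can be observed from inside a process. The sketch below assumes a Linux host with `/proc` mounted and a standard runtime such as Docker or Podman. It prints the kernel release, which a container reports identically to its host because it uses the host's kernel. It then prints the namespace identifiers under `/proc/self/ns`, which differ between a container and the host; those differences are where the isolation comes from. On other operating systems the namespace lookup simply returns an empty result:

```python
import os
import platform

NAMESPACES = ("pid", "net", "mnt", "uts", "ipc", "user")

def namespace_ids():
    """Return namespace identifiers for the current process (Linux only).

    Each value looks like 'pid:[4026531836]'. Two processes are in the same
    namespace when these identifiers match. Without /proc (e.g. on macOS or
    Windows) the lookups fail and an empty dict is returned.
    """
    ids = {}
    for ns in NAMESPACES:
        try:
            ids[ns] = os.readlink(f"/proc/self/ns/{ns}")
        except OSError:
            continue  # not Linux, or this namespace link is unreadable here
    return ids

if __name__ == "__main__":
    # Same value inside a container and on its host: the kernel is shared.
    print("kernel:", platform.release())
    # Different values inside a container and on its host: the views are isolated.
    for ns, ident in namespace_ids().items():
        print(f"{ns:5} {ident}")
```

Running the script once on the host and once inside a container on that host should show the same kernel line but different namespace identifiers.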

Benefits of Using Containers

Containers provide a convenient way to design, test, and deploy applications from your PC all the way to a production environment, whether on premises or in the cloud. Here are some of the benefits of using containerized applications.

1. Greater Modularity

Before containers, we had the old-fashioned monolithic model, in which a whole application comprising both frontend and backend components was bundled into a single package. Containers make it possible to split an application into multiple individual components that communicate with each other.

This way, development teams can work on different parts of an application in parallel, as long as no major changes are made to how the components interact with each other.

This is what the concept of microservices is based on.
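Here is a minimal sketch of two such components talking over HTTP, using only Python's standard library. `PriceService`, `start_backend`, and `frontend` are hypothetical names, and both components run in one process here only to keep the example self-contained. In a containerized deployment, each would run in its own container, and the frontend would reach the backend by a service name instead of a local port:

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

class PriceService(BaseHTTPRequestHandler):
    """Hypothetical backend microservice: returns a price as JSON."""

    def do_GET(self):
        body = json.dumps({"item": "widget", "price": 9.99}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # silence the default per-request logging

def start_backend():
    # Port 0 lets the OS choose a free port; serve requests on a background thread.
    server = HTTPServer(("127.0.0.1", 0), PriceService)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server

def frontend(backend_url):
    """Hypothetical frontend component: depends only on the backend's HTTP contract."""
    with urllib.request.urlopen(backend_url, timeout=5) as resp:
        data = json.load(resp)
    return f"{data['item']} costs ${data['price']:.2f}"

if __name__ == "__main__":
    server = start_backend()
    host, port = server.server_address
    try:
        print(frontend(f"http://{host}:{port}/price"))  # widget costs $9.99
    finally:
        server.shutdown()
        server.server_close()
```

Because the frontend relies only on the HTTP contract, either side can be rebuilt or redeployed independently as long as that contract stays the same.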

2. Increased Productivity

Greater modularity means greater productivity, since developers can work on individual components of the application and track down errors much faster than before.

3. Reduced Overhead Costs

Compared with virtual machines and other conventional computing environments, containers use fewer system resources because they do not include a full operating system of their own. This avoids needless spending on expensive servers to build and test applications.

4. Increased Portability

Due to their small footprint, containerized applications can easily be deployed to multiple computing environments and host systems.

5. Greater Efficiency & Flexibility

Containers allow for rapid deployment and scaling of applications. They also provide the much-needed flexibility to deploy applications in multiple software environments.

How do Containers Benefit DevOps Teams?

Containers play a key role in DevOps, and it is hard to imagine modern DevOps workflows without containerized applications. So, what exactly do containers bring to the table?

First, containers underpin the microservices architecture, allowing the building blocks of an entire application to be developed, deployed, and scaled independently. As mentioned, this makes for greater collaboration and rapid deployment of applications.

Containerization also plays a major role in CI/CD pipelines by providing a controlled and consistent environment for building applications. All the libraries and dependencies are packaged along with the code into a single unit for faster and easier deployment, so the application that is tested is exactly the software that gets deployed to production.
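One common way to guarantee that the tested artifact is the one deployed is to refer to it by a content digest rather than a mutable name or tag. The sketch below assumes a simplified model in which the built artifact is just a byte string. Real Docker/OCI image digests are computed over the image manifest, but they use the same `sha256:<hex>` form. The `promote` gate is hypothetical:

```python
import hashlib

def digest(artifact: bytes) -> str:
    """Return a content address in the 'sha256:<hex>' form used by OCI digests."""
    return "sha256:" + hashlib.sha256(artifact).hexdigest()

def promote(artifact: bytes, tested_digest: str) -> str:
    """Hypothetical deployment gate: only ship the exact bytes that CI tested."""
    actual = digest(artifact)
    if actual != tested_digest:
        raise ValueError(f"refusing to deploy {actual}: CI tested {tested_digest}")
    return actual  # a real pipeline would deploy by this digest, not by a tag

if __name__ == "__main__":
    build = b"app code + libraries + config"   # stand-in for a built image
    tested = digest(build)                       # recorded when CI tests pass
    print("deploying", promote(build, tested))
    try:
        promote(build + b" hotfix", tested)      # bytes changed after testing
    except ValueError as err:
        print(err)
```

Any change to the artifact after testing, however small, produces a different digest, so the gate rejects it.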

In addition, containers simplify the rollout of patches and updates when an application is split into multiple microservices, each in a separate container. Individual containers can be examined, patched, and restarted without interrupting the rest of the application.
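Patching one container at a time while the others keep serving is usually called a rolling update, and orchestrators such as Kubernetes provide it natively. The following is a plain simulation, not an orchestrator API, and `Replica` and `rolling_update` are hypothetical names. It takes one replica out of rotation at a time, so with three replicas at least two keep serving throughout the update:

```python
from dataclasses import dataclass

@dataclass
class Replica:
    """Hypothetical stand-in for one container running a copy of a microservice."""
    name: str
    version: str
    ready: bool = True

def rolling_update(replicas, new_version):
    """Patch replicas one at a time; return the ready count seen at each step."""
    capacity_log = []
    for replica in replicas:
        replica.ready = False                  # take this container out of rotation
        capacity_log.append(sum(r.ready for r in replicas))
        replica.version = new_version          # restart it from the patched image
        replica.ready = True                   # put it back into rotation
    return capacity_log

if __name__ == "__main__":
    pool = [Replica(f"api-{i}", "1.0") for i in range(3)]
    print(rolling_update(pool, "1.1"))   # [2, 2, 2]
    print({r.version for r in pool})     # {'1.1'}
```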

Conclusion

Any organization seeking to attain maturity in DevOps should consider leveraging the power of containers for agile and seamless deployments. The challenge lies in knowing how to configure, secure, and seamlessly deploy them to multiple environments.
