Like any other operating system, GNU/Linux manages memory efficiently on its own. Still, there are situations where you may want to release the memory the kernel is holding in its caches, and Linux provides a way to flush or clear the RAM cache.

In this article, we will explore how to clear RAM memory cache, buffer, and swap space on a Linux system to enhance overall performance.

Understanding RAM Memory Cache, Buffer, and Swap Space

Let’s explore RAM memory cache, buffer, and swap space on a Linux system.

RAM Memory Cache

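The RAM memory cache (the page cache) is where the kernel keeps recently accessed file data in otherwise unused RAM, so that repeated reads do not have to go to the disk. This boosts system responsiveness, but because the cache grows to fill free memory, it can make the system look short on RAM even though the kernel reclaims cached pages automatically when applications need them.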

Buffer

Like the cache, buffers hold data temporarily, but for a different purpose: they hold raw disk blocks and filesystem metadata in transit between memory and the disk, which lets the kernel batch disk I/O instead of issuing many small writes. A large backlog of unwritten data can, however, cause stalls when it is finally flushed to disk.

Swap Space

Swap space is a dedicated partition or file on disk where the kernel moves memory pages out of physical RAM when RAM runs low. While it helps prevent out-of-memory crashes, disk is far slower than RAM, so heavy swapping slows the system down.
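Before clearing anything, it can help to see how much memory the kernel is actually using for each of these. The sketch below reads the Buffers, Cached, SwapTotal, and SwapFree fields from /proc/meminfo (values in kB); the exact field match keeps SwapCached from being mistaken for Cached. The function name show_memory_usage and the MEMINFO override variable are hypothetical, added only so the snippet can be pointed at a sample file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarize buffers, page cache and swap use from /proc/meminfo.
# MEMINFO is an assumed override (for testing); it defaults to the live kernel file.
show_memory_usage() {
  local meminfo="${MEMINFO:-/proc/meminfo}"
  awk '
    $1 == "Buffers:"   { buffers = $2 }
    $1 == "Cached:"    { cached = $2 }
    $1 == "SwapTotal:" { swap_total = $2 }
    $1 == "SwapFree:"  { swap_free = $2 }
    END {
      printf "Buffers:    %d kB\n", buffers
      printf "Page cache: %d kB\n", cached
      printf "Swap used:  %d kB\n", swap_total - swap_free
    }
  ' "$meminfo"
}
```

Running show_memory_usage with no arguments prints the three figures for the live system, which gives you a baseline to compare against after any of the commands below.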

Clearing Cache, Buffer, and Swap Space in Linux

In certain situations, you may need to clear the cache, buffer, or swap space as explained below.

How to Clear RAM Memory Cache in Linux?

Every Linux system has three options to clear the cache without interrupting any processes or services.

Clearing PageCache

1. To clear only the PageCache, use the following command. The write to drop_caches must be done by root, so the whole line is wrapped in sudo sh -c:

sudo sh -c 'sync; echo 1 > /proc/sys/vm/drop_caches'

Clearing Dentries and Inodes

2. To clear only dentries and inodes, use the following command, which syncs the filesystem and then frees the memory held by cached directory entries and inode information.

sudo sh -c 'sync; echo 2 > /proc/sys/vm/drop_caches'

Clearing PageCache, Dentries, and Inodes

3. To clear the PageCache, dentries, and inodes together, use the following command, which syncs the filesystem and then frees all three kinds of cached data.

sudo sh -c 'sync; echo 3 > /proc/sys/vm/drop_caches'

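Here’s an explanation of each part of the above command:

- The sudo command runs a command as the superuser. Note that the > redirection is performed by the shell that runs the line, so a plain "sudo sync; echo 3 > ..." elevates only sync and the write fails with "Permission denied". Run the line from a root shell or wrap it in sudo sh -c '...' instead.
- The sync command flushes the file system buffers, writing dirty data to disk so that more of the cache can be dropped.
- The ";" semicolon separates multiple commands on a single line.
- The echo 3 > /proc/sys/vm/drop_caches command writes 3 to drop_caches, telling the kernel to drop the page cache (a temporary storage area for recently accessed files) as well as dentries and inodes.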

Note: The drop_caches file controls which type of cached data is cleared. The values are as follows:

- 1: Clears only the page cache.
- 2: Clears dentries and inodes.
- 3: Clears page cache, dentries, and inodes.

As the kernel documentation explains, writing to drop_caches is non-destructive: it discards clean cached data without killing any application or service, and it does not free dirty (not yet written) objects. The echo command simply does the job of writing the value to the file.

If you have to clear the disk cache in an enterprise or production environment, the first command is the safest, as “...echo 1 > ….” clears only the PageCache.

It is not recommended to use the third option above (“...echo 3 >”) in production unless you know what you are doing, as it clears the pagecache, dentries, and inodes.
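The three commands above differ only in the value written, so they can be wrapped in one small function. This is a sketch, not a standard tool: the name drop_caches_level and the DROP_CACHES_FILE override are hypothetical. It rejects anything other than 1, 2, or 3, runs sync first (the kernel documentation notes that drop_caches does not free dirty objects), and falls back to sudo tee when the current shell cannot write the file itself.

```shell
#!/usr/bin/env bash
# Hypothetical helper: drop_caches_level 1|2|3
#   1 = page cache, 2 = dentries and inodes, 3 = page cache, dentries and inodes.
# DROP_CACHES_FILE is an assumed override for testing; it defaults to the real sysctl file.
drop_caches_level() {
  local level="$1"
  local target="${DROP_CACHES_FILE:-/proc/sys/vm/drop_caches}"

  case "$level" in
    1|2|3) ;;
    *) echo "usage: drop_caches_level 1|2|3" >&2; return 2 ;;
  esac

  # Flush dirty pages to disk first so more cached pages are clean and droppable.
  sync

  if [ -w "$target" ]; then
    # Already root (or a writable test file): write directly.
    echo "$level" > "$target"
  else
    # A plain > redirection would run unprivileged, so let sudo tee do the write.
    echo "$level" | sudo tee "$target" > /dev/null
  fi
}
```

For example, drop_caches_level 1 clears only the page cache, which matches the safest option described above.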

How to Clear Swap Space in Linux?

To clear swap space, use the swapoff command with the -a option, which disables swapping on all known swap devices and files and moves the pages stored there back into RAM.

sudo swapoff -a

Then turn swap back on by running the following command, which activates all swap areas listed in /etc/fstab.

sudo swapon -a
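Before running swapoff -a, keep in mind that it has to read every swapped-out page back into RAM. If there is not enough memory to hold them, swapoff may fail with "Cannot allocate memory" or push the system toward the out-of-memory killer. The sketch below compares MemAvailable with the swap currently in use, as reported in /proc/meminfo. The function names and the MEMINFO override are hypothetical, and MemAvailable only exists on kernels 3.14 and later.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed only if the swap in use fits into MemAvailable.
# MEMINFO is an assumed override for testing; defaults to /proc/meminfo.
swap_fits_in_ram() {
  local meminfo="${MEMINFO:-/proc/meminfo}"
  awk '
    $1 == "MemAvailable:" { avail = $2 }
    $1 == "SwapTotal:"    { swap_total = $2 }
    $1 == "SwapFree:"     { swap_free = $2 }
    # Exit status 0 (success) when available RAM exceeds the swap in use.
    END { exit !(avail > swap_total - swap_free) }
  ' "$meminfo"
}

# Hypothetical wrapper: cycle swap only when the check passes.
reset_swap_safely() {
  if swap_fits_in_ram; then
    sudo swapoff -a && sudo swapon -a
  else
    echo "Not enough available RAM for the swapped-out pages; leaving swap alone." >&2
    return 1
  fi
}
```

Calling reset_swap_safely instead of the two commands above refuses to cycle swap when the pages would not fit back into memory.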

Is It Advisable to Free Buffer, Cache, and Swap in Linux?

In general, it is not a good idea to manually free the buffers and cache in Linux. The kernel is designed to manage these resources efficiently and reclaims them on its own when applications need memory, so clearing them by hand mostly forces data to be read from disk again and can disrupt system performance.

However, there may be rare situations where you need to clear the Buffer and Cache, such as if you are experiencing severe memory pressure and cannot free up memory by other means. In these cases, you should proceed with caution and be aware of the potential performance impact.

Similarly, clearing swap space in Linux is generally not a routine or advisable practice under normal circumstances.

Automating Memory Optimization

To automate the process and regularly clear memory, you can set up a cron job to run the commands at specified intervals.

Open root's crontab, since only root can write to /proc/sys/vm/drop_caches:

sudo crontab -e

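Add the following lines to clear the cache, buffers, and swap space daily at midnight. Because these entries live in root's crontab, they do not need sudo, and a single entry writing 3 covers what 1 and 2 clear separately. The PATH line makes sure cron can find swapoff and swapon, which usually live in an sbin directory that may not be on cron's default PATH:

PATH=/usr/sbin:/usr/bin:/sbin:/bin
0 0 * * * sync; echo 3 > /proc/sys/vm/drop_caches && swapoff -a && swapon -a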

For additional information on scheduling a job with cron, you may want to refer to our article titled ‘11 Cron Scheduling Jobs’.

Is It Advisable to Clear RAM Cache on a Linux Production Server?

No, it is not. Consider a scenario where you have scheduled a script to clear the RAM cache every day at 2 am. Each day at 2 am, the script is executed, flushing your RAM cache. However, one day an unexpectedly high number of users is online on your website, placing a significant demand on your server resources.

At the same time, the scheduled script runs and clears everything in the cache. Now every request has to fetch its data from the disk, so response times climb and requests may time out, or the server may become unresponsive under the load. Therefore, clear the RAM cache only when necessary and be mindful of your actions. Otherwise, you risk becoming a Cargo Cult System Administrator.
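One way to act on this advice is to make any scheduled job check the load average first and skip the drop when the server is busy. A minimal sketch follows; the function name system_is_idle, the MAX_LOAD threshold of 1.0, and the LOADAVG_FILE override are all assumptions to adapt to your own core count and traffic.

```shell
#!/usr/bin/env bash
# Hypothetical guard: succeed only when the 1-minute load average is below MAX_LOAD.
# MAX_LOAD (default 1.0) and LOADAVG_FILE are assumed settings, not standard variables.
system_is_idle() {
  local max_load="${MAX_LOAD:-1.0}"
  local loadavg="${LOADAVG_FILE:-/proc/loadavg}"
  # The first field of /proc/loadavg is the 1-minute load average.
  awk -v max="$max_load" '{ exit !($1 + 0 < max + 0) }' "$loadavg"
}
```

In a script run from root's crontab, the drop itself would then become system_is_idle && { sync; echo 1 > /proc/sys/vm/drop_caches; }, so a busy night simply skips the flush.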

Conclusion

Efficient memory management is crucial for a smoothly running Linux system. The kernel handles the RAM memory cache, buffers, and swap space well on its own, so clear them manually only when you have a clear reason to, such as benchmarking. By understanding these mechanisms and employing the provided commands carefully, you can keep your Linux system running at its best.

That’s all for now. If you enjoyed the article, please remember to share your valuable feedback in the comments. Let us know your thoughts on what you believe is a good approach for clearing the RAM cache and buffer in production and enterprise environments.

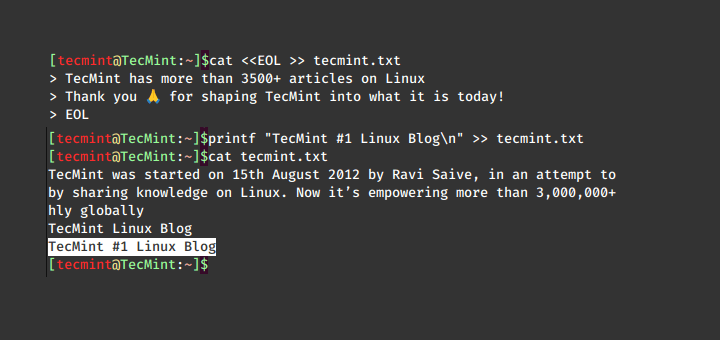

I use:

# echo 3 > /proc/sys/vm/drop_caches && swapoff -a && swapon -a && printf '\n%s\n' 'Ram-cache and Swap Cleared'

(Note: dphys-swapfile swapon)

Second: It also kills zram so you need to (re)start zram again.

It will result in a server crash and corrupt the database.

Can you please explain this? Why could clearing the cache crash the server and corrupt the database?

Thanks

The following command, giving me permission denied, even with sudo:

Same here. You first need to drop into a root shell (hence the “#” in the article) via “sudo su”, and only then will it work.

$ sync; echo 1 | sudo tee /proc/sys/vm/drop_caches

This is only really useful for benchmarking and flushing things after upsetting the usual pattern.

For instance, if you and a bunch of co-workers have been reviewing and refreshing a 12GB log file in production, then flushing the caches might make sense so then the system will cache more useful data.

Apart from that, Linux will take care of things by itself.

It could also be useful for cases where garbage collection isn’t functioning properly in certain apps, and the RAM isn’t reclaimed on time, as I had found out on my system.

I want to ask: is it safe to clean cached memory on a Linux mail server? Will this process interfere with users sending or receiving emails?

@Ahmad,

Yes, it is safe to remove cached memory buffer to free up memory space…

The reason to drop caches like this is for benchmarking disk performance and is the only reason it exists.

When running an I/O-intensive benchmark, you want to be sure that the various settings you try are all actually doing disk I/O, so Linux allows you to drop caches rather than do a full reboot.

https://serverfault.com/questions/597115/why-drop-caches-in-linux

Thank you.

What is this with echo? echo “echo 3 > /proc/sys/vm/drop_caches“. You should just use echo 3 > /proc/sys/vm/drop_caches, or echo 3 | sudo tee /proc/sys/vm/drop_caches.

The remark that ‘3’ should not be used in production systems is ridiculous and invalid. It is fully supported and stable for decades. drop_caches is usually only useful when doing benchmarks and timing tests of file systems and block devices, or network attached storage or file systems. You shouldn’t use any drop_cache in the first place in any real production system. It is only for testing and debugging.

echoing the echoed echo, echo.

Exactly, Witold. I second you. The author of this article “heard the song but got it wrong”.

It may be necessary to go back to normal operation after these settings. See the following document from kernel.org.

Returning system back to normal requires user to write “4” into drop_caches

https://www.kernel.org/doc/Documentation/sysctl/vm.txt

Zeki, no there is no need to write 4. Documentation says it clearly. 4 (bit 2), is only to disable the dmesg / kernel log messages when the drop_caches is issued. writing 1, 2 or 3 is one time thing, and immediately after (or even during) the page cache and other caches will start to be populated back according to system usage.

I can hardly see a need to ever use 4. It might be useful if you do it every few minutes for some strange reason and want to avoid polluting the kernel log (dmesg) with spam. But it is just one line, and if you do it sporadically, there is zero reason to use 4.

You can’t use “echo 4” to release memories, because centos system just has 1, 2, or 3 options.

This is on a personal device that I’m only running one app on that’s not critical but want to make sure the performance is as good as it can be.

The command to clear the caches doesn’t run until I add sudo. I get access denied without it. Would this be proper?

sync; echo 3 > sudo /proc/sys/vm/drop_caches

@Steve,

Sometimes you need superuser privileges to run commands..Nothing to worry..

What I’m asking is WHERE I put the sudo command because it didn’t work in front of sync or echo and I didn’t realize drop-caches was an executable command that would respond to sudo. It seems to work there but I’m just making sure that’s a proper use of sudo in that syntax.

Actually found something that seems to work much better than what I last tried:

sync; sudo sh -c "echo 3 > /proc/sys/vm/drop_caches"

The buff/cache column on free -h dropped from 340MB to 97MB and the free column went from 78MB to 217MB. Bigger difference than before.

su -c "echo 3 >'/proc/sys/vm/drop_caches' && swapoff -a && swapon -a && printf '\n%s\n' 'Ram-cache and Swap Cleared'" root

Is there a password for this after hitting enter??? If not, why does it ask me?

and could this be applied for the home desktops too ???

You might want to correct

to

When are we going to stop talking about cron, and start talking about systemd timers? It’s time, guys.

Well, not everybody is in love with systemd.

If you are in love with systemd, OK, go ahead and use timers, but don’t pretend that everybody agrees with you.

Exactly! I literally went out of my way to comment specifically on this. Why does systemd even need a timer? It’s _supposed_ to be an init system.

When are we going to stop talking about systemd and move to openRC? It’s time, guys. Long, long past time in fact.

What is the tool you used to generate the screenshot GIF?

To generate animated screenshot GIFs, have a look at “peek”, available from the GitHub repository.

How can I add swap space to an already running server?

Hope this article will help you out – How To Create a Linux Swap File

This worked a treat for me.. thanks guys

Bizarre. Am I the only one for whom none of the listed commands works? Is it the spaces between the terms?

Because if I copy the first command, either: “sync; echo 1> / proc / sys / vm / drop_caches ”

I get: “bash: /: is a folder” and that’s it!

Thank you for correcting me.

Sincerely, Dan.

Definitely no spaces after first forward slash. I would probably put a space between 1> , but not sure it is required.

echo "echo 3 > /proc/sys/vm/drop_caches"

Not sure about this command.

Before shutting down or rebooting a production server, what are the required steps to perform? Could you please explain with commands and examples?

Very useful… Thank you very much!!

Quote from the Article:

> At the same time scheduled script run and clears everything in cache.

> Now all the user are fetching data from disk. It will result in server crash and corrupt the database.

WHAT??? If this were a true statement, then you would get server crashes and corrupted databases upon first boot or reboot of the server. This obviously is not the case. Worst case you’ll have increased response times, or server timeouts. This untrue statement unfortunately detracts from an otherwise informative article.

How can I stop or remove the echo 1, because it’s running?

I think restarting your device will do so.

Hi,

When I try to create a .sh file with the given contents, it shows this error: bash: ./clearcacahe.sh: /bin/bash^M: bad interpreter: No such file or directory

Use the dos2unix command, as the above file is in DOS format.

@Mandar,

Seems like you have a file with DOS line endings. The clue is the ^M. You need to save the file using Unix line endings; for example, open your script in the vi/vim editor, hit the ESC key, and type this:

If you have the dos2unix command-line program, it will also do this for you.
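If dos2unix is not installed, GNU sed can strip the carriage returns instead (in vim, :set fileformat=unix followed by :wq does the same job). The sketch below assumes GNU sed, whose -i flag and \r escape behave differently from BSD/macOS sed; strip_crlf is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert DOS (CRLF) line endings to Unix (LF) in place.
# Assumes GNU sed: -i edits the file in place and \r matches a carriage return.
strip_crlf() {
  sed -i 's/\r$//' "$@"
}
```

For the script above, strip_crlf clearcacahe.sh should remove the ^M that makes bash look for an interpreter named "/bin/bash^M".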

Thanks Ravi for your help

Thanks for providing such a great article.

I have one doubt about echo 3: if I use "echo 3" in my production environment to clean the buffer cache, will the above command have any impact on the OS RAM? Will it delete useful data from physical RAM?

@Mdsameer,

It will not delete any data on the drive, just clears the cache or buffer cache from the RAM that’s it.

Thanks !

but only clear it when the load is very low like 4am etc. I do it every Sunday.

Generally, I used to clear the cache during business hours.

Thanks

Hello, I have CentOS 7.4 with cPanel 68.0.27 on my server (with a hard disk drive).

I see at “/var/log/cron” the command “sync; echo 3 > /proc/sys/vm/drop_caches” run every hour.

How can i change it?

I check it with “crontab -e” but i didn’t find there…

@Tanasis,

There must be a cron job set for that command. Please check all crontab entries and files carefully, such as the cron.hourly directory under /etc.
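That search can be scripted, since a job like this can hide in several places besides the per-user crontab shown by crontab -e. The sketch below searches the usual system cron locations for drop_caches. The path list is an assumption that matches common layouts (per-user crontabs live in /var/spool/cron on RHEL/CentOS and in /var/spool/cron/crontabs on Debian/Ubuntu), the function name is hypothetical, and reading the spool directory requires root.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list cron files that mention drop_caches.
# With no arguments, it searches common system cron locations (an assumed list).
find_drop_caches_jobs() {
  if [ "$#" -eq 0 ]; then
    set -- /etc/crontab /etc/cron.d /etc/cron.hourly /etc/cron.daily \
           /etc/cron.weekly /var/spool/cron
  fi
  # -r: recurse into directories, -l: print only the names of matching files.
  # Missing paths and unreadable files are silently skipped.
  grep -rl -- 'drop_caches' "$@" 2>/dev/null
}
```

Run as root, find_drop_caches_jobs would list a file such as /etc/cron.d/sync if it contains the command.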

I found it at /etc/cron.d/sync.

I asked the hosting company, and they suggested changing the “echo 3 > …” line to “echo 1 > ..” in order to free only the page cache instead of also freeing dentries and inodes.

Before i removed the “echo 3 > …” i checked my RAM. It had:

After 5 hours i have (i had removed “echo 3 > …“) :

Am I OK, What do you suggest?

@Tenasis,

Yes, it’s now perfect the command now only erasing page cache and I think which is fine for you..

Ravi, i didn’t change the “echo 3” to “echo 1”.

I had removed it…

So,

– the memory status when i had the “echo 3” was

Mem : 30717040 total, 25244176 free, 2631884 used, 2840980 buff/cache

After half day

– the memory status without any “echo x” was

Mem : 30717040 total, 244400 free, 2790844 used, 27681796 buff/cache

The swap was 0

It is OK?

Should I set “echo 1” every hour, or leave it without any “echo xxx”?

@Tanasis,

For now, leave it as it is, and monitor for 2-3 days and see how it works, if it’s clear page cache every hour that’s fine else set echo with option.

I tried with “echo 1 > ..”

I get high CPU for 2-3 minutes because cron clears the buff/cache.

I think, I will set “echo 3 > ..” every 4:00am.

When i didn’t have the “echo” my free RAM was low (244400 free, 2790844 used, 27681796 buff/cache), but buff/cache was high and the swap was zero. Also avail Mem was high too.

@Tanasis,

It’s because I think the system in process of deleting Buffer/Cache from the system, during the process may be some RAM is used, once the cache is cleared all comes to normal..

I think Linux was hungry so that ate the RAM..:)

Thanks for your useful posts.

Unfortunately, your website is not available in my country (Iran), so we have to use a proxy to read your great posts.

@Ali,

Could you tell us, what error you getting while accessing our website? it will be more helpful us to find why the site is getting blocked..

@Stephanie

Yes, very useful.

You are getting permission denied because sudo applies only to sync. After the semicolon, echo 1 and its > redirection run in your own, unprivileged shell, so you’re not running echo as sudo anymore.

How about a sudo -i followed by the command.

I used ‘sync; echo 3 > /proc/sys/vm/drop_caches’ on my Linux (RHEL5) system, and it is taking a long time to finish. I can also see in top that the bash process has high CPU utilization.

What should I do next in this case, or should I kill the process? Can someone help me with it?

Hi there, I am working on my site, hence I have been given this code to clear the cache /serverscripts/clear_cache.sh and now the problem is whenever there is a new article published on site the new article doesn’t appear on site unless I go to putty and go with that command /serverscripts/clear_cache.sh

so please help me in this, as used some cache plugin but didn’t worked well even some plugin loaded my site RAM, so help me!

Not useful.

“permission denied”

Even when root or using sudo.

On my Ubuntu 16.04 only the root user can write to /proc/sys/vm/drop_caches and a sudoer user cannot.

On top of that, even the root user cannot grant write permission on this file.

I ran into the same issue, you can sudo a shell command and it should work (does for me Ubuntu 16.04 and Bodhi).

E.g:

When you talk about clearing swap space you say after considering the risk.

Can you please elaborate more what are the risks in clearing swap space?

this helped me a lot.. Great work dude !!

Frankly I am amazed. Above you wrote up the one reason why anyone would do such a thing as cleaning the cache on Linux: testing – especially benchmarking. Then you go ahead and explain how to set up a cron job that cleans the cache every night.

what is the point of that? Any newbie reading this will think that cleaning the cache (or even reconnecting the swap partition) is a good thing to do for administration purposes, like you would do when you clean the disk cache for Internet Explorer on a Windows machine.

It isn’t. The explanation why it is not is in your article, but the way how it is mentioned embedded in instructions on how to do it anyway seems to be misleading to newbies so please allow me to explain.

Yes, there are some applications around that hog memory so bad that the system memory may be eaten up and the system starts migrating memory pages onto the swap partition. Firefox comes to mind as it can become a problem when running with only 2GB of system memory.

Even if you close tabs of especially memory hungry web pages (ebay is a really bad offender here) not all the code in memory will be released as it should be. Keep in mind here that this is a problem of the application and not Linux though. This means you won’t get that memory back by fiddling with the os, like dropping the cache anyway. The intervention required would be to do something about Firefox.

The only way I know of to get the memory back is to terminate the offending process i.e. Firefox. A notable exception to this are databases that can seem to hog memory if they are not properly configured (opposed to poor memory management within the application) but even then you’ll need to look at your database first (while keeping in mind that ‘Database Administrator’ is a job description for a reason. Whatever you do, purging the cache won’t help).

So yes, what I am saying is that the proposition in the second sentence of this article is false. If you have a process that is eating up your memory, then purging the cache won’t even touch it while the process is running.

Terminating the process will release the memory. Sometimes you can even observe how the kernel decides to discard most of the memory claimed by such a terminated process itself, i.e. it doesn’t even keep it in the cache.

If the process claimed enough memory, it may have displaced a lot of essential code from the memory into the swap space causing the computer to run slower for a little while longer until that memory code is retrieved. Now if you are on your desktop at home you may want to follow the instructions above and say ‘swapoff -a && swapon -a‘ and get a cup of tea and when you are back your computer will be fast again.

If you don’t like tea you may just want to continue what you have been doing without reconnecting your swap as it probably won’t take long for the memory to migrate back anyway. NOT reconnecting swap will have the advantage that only the code that is actually needed will be placed back into memory (my preferred choice). So: reconnecting swap will consume more system resources overall than letting the kernel deal with it.

Do not reconnect swap on a live production system unless you really think you know what you are doing. But then I shouldn’t have to say this as you would find out about this anyway while doing your research / testing as you should when doing this kind of stuff on a live production system.

Here is another thought. Maybe the cache-drop fallacy comes from the way memory usage is traditionally accounted for on Linux systems. For example, if you open ‘top’ in a terminal and look at the row where it says ‘Mem’, there are entries for ‘free’ and ‘used’ memory.

Now the stats for used memory always include the memory used for caching and buffering. The free memory is the memory that is not used at all. So if you want to know the memory used by the OS and applications, subtract the buffer and cache values from the used memory, and you’ll get the footprint of all the resident memory used by applications.
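The subtraction described here can be done directly from /proc/meminfo. This is a rough sketch of that formula (MemTotal minus MemFree, Buffers, and Cached) and only an approximation: the kernel also holds reclaimable slab and shared memory that it ignores, and recent versions of free already report used memory without buff/cache. The function name app_memory_kb and the MEMINFO override are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: approximate memory used by applications, in kB,
# computed as MemTotal - MemFree - Buffers - Cached.
# MEMINFO is an assumed override for testing; defaults to /proc/meminfo.
app_memory_kb() {
  local meminfo="${MEMINFO:-/proc/meminfo}"
  awk '
    $1 == "MemTotal:" { total = $2 }
    $1 == "MemFree:"  { free = $2 }
    $1 == "Buffers:"  { buffers = $2 }
    $1 == "Cached:"   { cached = $2 }
    END { print total - free - buffers - cached }
  ' "$meminfo"
}
```

If this number is small compared with MemTotal, the system is not actually short of memory, however little "free" memory top shows.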

If you don’t know that and only looked at the amount of free memory, you may have thought you were actually running out of physical memory, but as long as there is plenty of memory used by the cache, this is not true. If you drop the cache as described above, top will report all that memory as free memory, but this is really not what you thought you wanted – unless you are testing or benchmarking (see Ole Tange’s post here for an example).

Now the policy of the Linux kernel is to use as much of the memory as it can for something useful. First priority obviously goes to os / application code. All the rest is used for buffer/cache (more on that here: http://stackoverflow.com/questions/6345020/linux-memory-buffer-vs-cache).

It’s written above in the article but I’ll say it here again: the data in the cache are copies of files stored on your main drive. It’s kept there just in case it’s needed again, so it’s there a lot quicker than having to read it from the drive again.

This is good and you want to keep it that way on any kind of Linux installation. Unless you are testing (in a test environment). Or just playing around and learning something new, and for that your article is brilliant!

P.S.: I noticed some people here trying to flush the cache while they are obviously having problems with limited memory. As I said before this is something to look at at application level rather than os level.

A good first step in finding out what is causing the memory bottleneck is to use ‘top’. Enter this at the command line and press Shift+m (or M if you like). This will sort the list of processes running on your system by their resident memory footprint. The column you need to look at is ‘RES’ for resident memory (the part of a process’s virtual memory that is actually held in physical RAM).
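A non-interactive equivalent of sorting top by memory is ps from procps, which can sort on the RSS (resident set size, in kB) column directly. The --sort option is a procps feature and may be missing from minimal ps implementations such as BusyBox; the function name and the default of 10 processes are arbitrary choices for this sketch.

```shell
#!/usr/bin/env bash
# Hypothetical helper: show the N processes with the largest resident memory (RSS, in kB).
# Requires procps ps for the --sort option; N defaults to 10.
top_memory_processes() {
  local count="${1:-10}"
  # One header line plus the top "count" processes, largest RSS first.
  ps -eo pid,rss,comm --sort=-rss | head -n "$((count + 1))"
}
```

Because it prints plain text, top_memory_processes 5 can be logged from cron to see which process was growing before a slowdown.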

You’ll soon see which process is causing most problems. There is not one answer to what to do next. A process like Firefox may be restarted. If the memory problem is caused by virtual machines the host memory is probably over committed, i.e. the combined allocated memory of all vms exceeds the total amount of physical memory on the host or at least doesn’t leave it any space to run itself.

If this is a real problem on your box you could try to reduce the allocatable memory for each vm. If that is not an option the cheapest and easiest way to solve this problem is usually to stick some more memory into the box (if running KVM, a good start is reading this: http://www.linux-kvm.org/page/Memory).

It could be the application you are running has a bug in memory management (try up or downgrading, consider filing a bug report). It could be the application just requires more memory than you have installed (image/video editing apps come to mind, where the amount of required memory depends on the size of the files you are working on).

Again, if this is an ongoing issue, you won’t get around upgrading your memory. Enterprise-grade databases such as Oracle are harder to advise on here. As they can do their own cache management, you won’t necessarily see what’s really going on with top, and just throwing ‘more tin’ at it, i.e. just installing more memory, may make very little difference.

Read an introduction about profiling for the specific db you are running, if you don’t have one already: set up a test machine (with hardware as similar as reasonably possible to the production one), copy the configuration and data set from your production box over and set up test scenarios that hopefully replicate some of the peak use cases and take it from there. Distributed apps like apache, enterprise grade accounting software, you name it have their own specific requirements.

Whatever else you do, look at the documentation of the app.

Once you understand what’s going on there are a few (advanced) things that can be done on os level. One example is setting up cgroups to control the ‘swappiness‘ of certain sets of applications (read http://unix.stackexchange.com/questions/10214/how-to-set-per-process-swapiness-for-linux/10227#10227).

If you consider setting this up on a production system you do want to set it up in a test environment first and make sure it does what you want it to do. You have been warned.

Hey there,

Is it okay for SSD server to clear pagecache only on hourly cron?

Thanks

Clearing the cache is definitely useless..

The cache you see there is just the in-memory content of disk files (a basic speed enhancement for ext2/3/4 accesses). The goal is to speed up access to commonly used files WHEN memory is available (RAM not in use).

So, Linux automatically releases this “cache” when a process needs memory. What you do by “resetting” the cache is remove those files’ contents from RAM and make your system read them from the disk instead (you know that disk accesses are slower, so your applications will perform worse; the Linux EXT filesystems cache data precisely for performance).

Then for me this is useless ( is automatically managed by the OS ) and even could lead to performances issues on high load applications …

Hey I have working servers on VM’s but after some days it got slow. So I have to reboot every 7 days or whenever we face error..

There is no user login after 11 am, so can I use echo 3, together with turning swap off and on again?

@Prashant,

Yes, you can use echo 3 command to clear your RAM cache and buffer to free up some space to function server properly..

@Ravi

i tried and checked and worked fine echo but some VMs start using Swap memory i.e “swapoff -a && swapon -a” gave some error regarding volume group.

I will post that when i will get same error and any way thanks cause mainly i use echo 1 but still i need some vms to restart.

lets see if this echo 3 can fix that issue

This is not working fine for us. So could you provide some alternate for this.

When you would use this:

When measuring performance it can be important to do that in a reproducible way. Caches can often mess up these results.

So one of the situations where you would drop all caches is when you have several ways to do the same thing and are trying to figure out which one is the fastest.
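A minimal sketch of that approach times each candidate command from a cold cache so the runs are comparable. It must run as root because it writes to /proc/sys/vm/drop_caches. The function name, the DROP_CACHES_FILE override, and the example commands are hypothetical, and it relies on bash's built-in time keyword.

```shell
#!/usr/bin/env bash
# Hypothetical benchmark loop: drop all caches before timing each command.
# Needs root for the write; DROP_CACHES_FILE is an assumed override for testing.
cold_cache_time() {
  local target="${DROP_CACHES_FILE:-/proc/sys/vm/drop_caches}"
  local cmd
  for cmd in "$@"; do
    sync                              # write dirty pages so they can be dropped too
    echo 3 > "$target"                # drop page cache, dentries and inodes
    echo "== $cmd"
    time sh -c "$cmd" > /dev/null     # bash prints the timing report on stderr
  done
}
```

As root, something like cold_cache_time 'cat bigfile > /dev/null' 'grep -c foo bigfile' would then compare two ways of reading a placeholder file, each starting from an empty cache.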

Very clear explanation. Thank you.

Oh, that was fun. I like getting 10GB of RAM back in one command…!

Are you sure that it can corrupt the database? I think that database can be pretty slow but no file should be corrupted.

@Pavel,

No it will not corrupt database, its just clears the Cache and Buffer in Linux.

Hi Avishek,

Great article. Just a little correction maybe on the crontab entry. Is it really 2pm? Cheers! :)

@Viril,

Thanks for pointing out, yes that was typo it should be 2pm, corrected in the writeup..

It should be 2am and not 2pm.

@Pavel,

Thanks for notifying, yes it is 2am, corrected in the writeup..

Unfortunately swapoff -a && swapon -a doesn’t work for me. I know some tricks but I can’t clear swap completely.

sync command is not needed, it internally synch’s the dirty pages.

Great articles. Very useful.

Many thanks.

When I tried to manipulate the proc folder, my system crashed.

What can I do?

My OS is Ubuntu MATE 14.10.

thanks a lot for this benefit post

I am sorry Kamrad1372,

but i could not understand you.

Could you be a little more clear?

Also post your Input and Output, very Clearly.

nothing happen my system stop working when edit process folder I don’t know

Excuse me, but why would anyone want to clear the caches? That makes no sense at all.

Okay, you do mention system tests – benchmarking, I guess? This is actually the *only* use case I can think of. Otherwise you shouldn’t do this!

Dear Mr. Evil,

As I already suggested in the post itself, RAM cache cleaning is not a good idea, and one should take extra precautions, especially in production. And yes, I mentioned I/O benchmarking as a case where this can be very useful. For most other things, Linux kernel memory management is intelligent enough.

Nice post, I searched some months ago about that topic but didnt find any helpful stuff. Now you cleared some things up for me. Great! Hope a lot of good articles are in queue :-)

Tecmint is the best site Ive found so far!

Dear Kay,

I feel happy to help you specially when you were searching for this, very recently. Keep Connected for more such posts.

Thanks for the compliment by-the-way.

Hello ,, article is good and useful .. When i have to execute/use the following command? May be if system is hanged state or at what instance i have to use this commands ..please let me know .Thank you

on a general system you probably don’t need it. and it is strictly not recommended in production. If you are dealing with I/O benchmark-ing, this is for you. I would suggest you to go through the post once again.

thanks your post, it’s helpful for me

Welcome ccame,

Keep Connected for more such posts.

# LANG=C echo 1 > /proc/sys/vm/drop_cache

bash: /proc/sys/vm/drop_cache: No such file or directory

# uname -r

4.0.4-303.fc22.i686

Fedora 22.

Ooops, sorry, echo 1 > /proc/sys/vm/drop_caches works :)

The first 3 examples are incorrect – /proc/sys/vm/drop_cache instead of /proc/sys/vm/drop_caches

@Nerijus,

Sorry for that mistake, we’ve corrected in the write up..

I am having some issues you will find the commands and error messages:

david@david-XFX-nForce-780i-3-Way-SLI:~$ sync; echo 3 > /proc/sys/vm/drop_cache

bash: /proc/sys/vm/drop_cache: No such file or directory

david@david-XFX-nForce-780i-3-Way-SLI:~$ echo 3 > /proc/sys/vm/drop_caches && swapoff -a && swapon -a && printf ‘\n%s\n’ ‘Ram-cache and Swap Cleared’

bash: /proc/sys/vm/drop_caches: Permission denied

david@david-XFX-nForce-780i-3-Way-SLI:~$ su -c ‘echo 3 >/proc/sys/vm/drop_caches’ && swapoff -a && swapon -a && printf ‘\n%s\n’ ‘Ram-cache and Swap Cleared’

Password:

su: Authentication failure

david@david-XFX-nForce-780i-3-Way-SLI:~$ sudo -c ‘echo 3 >/proc/sys/vm/drop_caches’ && swapoff -a && swapon -a && printf ‘\n%s\n’ ‘Ram-cache and Swap Cleared’

usage: sudo -h | -K | -k | -V

usage: sudo -v [-AknS] [-g group] [-h host] [-p prompt] [-u user]

usage: sudo -l [-AknS] [-g group] [-h host] [-p prompt] [-U user] [-u user] [command]

usage: sudo [-AbEHknPS] [-r role] [-t type] [-C num] [-g group] [-h host] [-p prompt] [-u

user] [VAR=value] [-i|-s] []

usage: sudo -e [-AknS] [-r role] [-t type] [-C num] [-g group] [-h host] [-p prompt] [-u

user] file …

david@david-XFX-nForce-780i-3-Way-SLI:~$

I am not sure what I am doing wrong, but any help would be much appreciated.

Dear David Armstrong,

I apologize for the inconvenience. Actually it was an error on my side.

The commands should be

$ sync; echo 3 > /proc/sys/vm/drop_caches

Notice it is drop_caches and not drop_cache

for the next part

# echo 3 > /proc/sys/vm/drop_caches && swapoff -a && swapon -a && printf '\n%s\n' 'Ram-cache and Swap Cleared'

This should be run as root, not as a regular user. Regular users don’t have permission to write to the file /proc/sys/vm/drop_caches.

Also check the article for the updated third command. All your problem should be fixed now.