When a system administrator wants to increase the available bandwidth and provide redundancy and load balancing for data transfers, a kernel feature known as network bonding gets the job done in a cost-effective way.

In simple words, bonding means aggregating two or more physical network interfaces (called slaves) into a single, logical one (called master). If a specific NIC (Network Interface Card) experiences a problem, communications are not affected significantly as long as the other(s) remain active.

Read more about network bonding in Linux systems here:

- Network Teaming or NIC Bonding in RHEL/CentOS 6/5

- Network NIC Bonding or Teaming on Debian based Systems

- How to Configure Network Bonding or Teaming in Ubuntu

Enabling and Configuring Network Bonding or Teaming

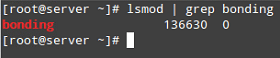

By default, the bonding kernel module is not loaded. Thus, we will need to load it and ensure it is persistent across boots. When used with the --first-time option, modprobe reports an error instead of silently succeeding if the module is already loaded:

# modprobe --first-time bonding

The above command will load the bonding module for the current session. To make the change persistent, create a .conf file inside /etc/modules-load.d with a descriptive name, such as /etc/modules-load.d/bonding.conf:

# echo "# Load the bonding kernel module at boot" > /etc/modules-load.d/bonding.conf
# echo "bonding" >> /etc/modules-load.d/bonding.conf

Now reboot your server and once it restarts, make sure the bonding module is loaded automatically, as seen in Fig. 1:
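The exact command behind Fig. 1 is not reproduced in the text. A common way to do this check is to look for the module name in the list of loaded modules, for example with `lsmod | grep bonding`. The sketch below does the same by reading /proc/modules directly. The helper name `module_loaded` and its optional file argument are our own additions, included so that the check can also be tried against a sample file:

```shell
# module_loaded NAME [FILE]
# Succeeds if NAME is listed as a loaded module in FILE
# (default: /proc/modules, the data that lsmod formats).
# The helper name and the FILE argument are hypothetical conveniences.
module_loaded() {
    awk -v m="$1" '$1 == m { found = 1 } END { exit !found }' "${2:-/proc/modules}"
}

if module_loaded bonding; then
    echo "bonding module is loaded"
else
    echo "bonding module is NOT loaded"
fi
```

Matching on the first field avoids false positives from modules whose names merely contain the word "bonding".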

In this article we will use 3 interfaces (enp0s3, enp0s8, and enp0s9) to create a bond, named conveniently bond0.

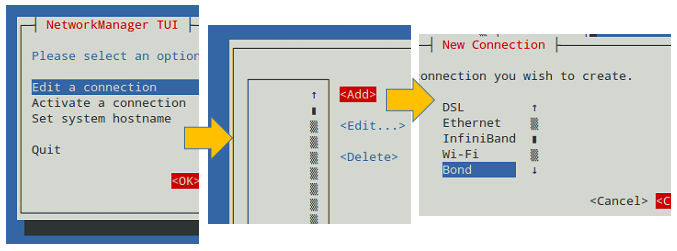

To create bond0, we can either use nmtui, the text user interface for controlling NetworkManager, or nmcli, its command-line counterpart; this article uses nmtui. When invoked without arguments from the command line, nmtui brings up a text interface that allows you to edit an existing connection, activate a connection, or set the system hostname.

Choose Edit connection –> Add –> Bond as illustrated in Fig. 2:

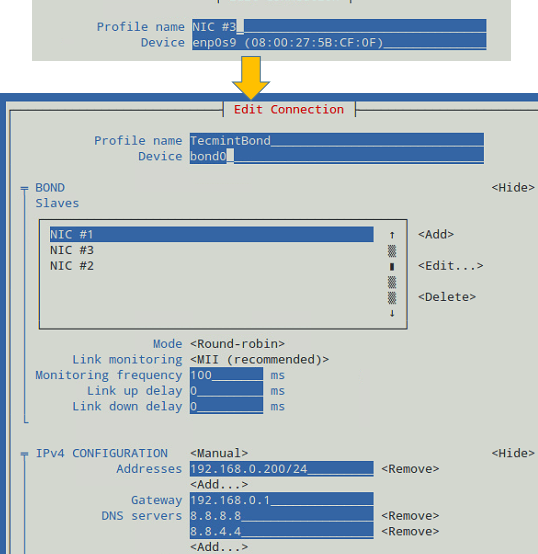

In the Edit Connection screen, add the slave interfaces (enp0s3, enp0s8, and enp0s9 in our case) and give them a descriptive (Profile) name (for example, NIC #1, NIC #2, and NIC #3, respectively).

In addition, you will need to set a name and device for the bond (TecmintBond and bond0 in Fig. 3, respectively), assign an IP address to bond0, and enter a gateway address and the IP addresses of the DNS servers.

Note that you do not need to enter the MAC address of each interface since nmtui will do that for you. You can leave all other settings as default. See Fig. 3 for more details.

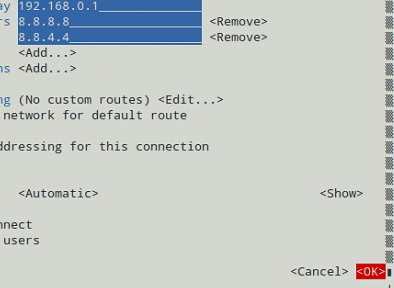

When you’re done, go to the bottom of the screen and choose OK (see Fig. 4):

And you’re done. Now you can exit the text interface and return to the command line, where you will enable the newly created interface using the ip command:

# ip link set dev bond0 up

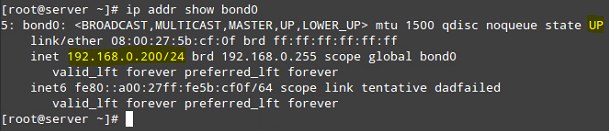

After that, you can see that bond0 is UP and is assigned 192.168.0.200, as seen in Fig. 5:

# ip addr show bond0
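If you prefer to script the setup instead of stepping through nmtui, the same bond can be sketched with nmcli. The example below is only an approximation of the nmtui steps above. The /24 prefix, the gateway 192.168.0.1, the DNS server 8.8.8.8, and the slave profile names (bond0-enp0s3 and so on) are assumptions of ours, not values from the article. It also assumes a NetworkManager version that has the `ipv4.gateway` property (1.0 or later, to our knowledge) and that still accepts the `bond-slave` connection type. By default the script only prints the commands; set APPLY=1 and run it as root to execute them:

```shell
#!/bin/sh
# Sketch: create the article's bond with nmcli instead of nmtui.
# Assumptions (not from the article): /24 prefix, gateway 192.168.0.1,
# DNS server 8.8.8.8, and slave profile names of the form bond0-<nic>.
# Commands are only printed unless APPLY=1 is set (run as root in that case).

run() {
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    else
        echo "$*"
    fi
}

create_bond() {
    # Bond master in round-robin mode (mode 0), as in the article
    run nmcli connection add type bond con-name TecmintBond ifname bond0 mode balance-rr

    # Static IP settings for the bond; the method is switched to manual last
    run nmcli connection modify TecmintBond \
        ipv4.addresses 192.168.0.200/24 \
        ipv4.gateway 192.168.0.1 \
        ipv4.dns 8.8.8.8 \
        ipv4.method manual

    # One slave profile per physical interface
    for nic in enp0s3 enp0s8 enp0s9; do
        run nmcli connection add type bond-slave con-name "bond0-$nic" ifname "$nic" master bond0
    done

    # Activate the slaves first, then the bond itself
    for nic in enp0s3 enp0s8 enp0s9; do
        run nmcli connection up "bond0-$nic"
    done
    run nmcli connection up TecmintBond
}

create_bond
```

Review the printed commands against your own addressing plan before running them with APPLY=1, since they create new connection profiles on the system.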

Testing Network Bonding or Teaming in Linux

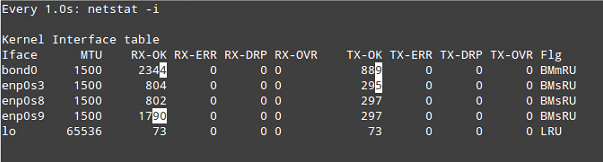

To verify that bond0 actually works, you can either ping its IP address from another machine or, better yet, watch the kernel interface table in real time (the refresh interval in seconds is given by the -n option) to see how network traffic is distributed between the three network interfaces, as shown in Fig. 6.

The -d option is used to highlight changes when they occur:

# watch -d -n1 netstat -i
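netstat belongs to the net-tools package, which may not be installed on a minimal system. As a rough alternative, the kernel also exposes per-interface packet counters under /sys/class/net/<interface>/statistics/. The sketch below prints the received and transmitted packet counts for the bond and its slaves. The helper name `print_counters` and its base-directory argument are our own, and the counters should correspond only approximately to the RX-OK and TX-OK columns:

```shell
# print_counters BASEDIR IFACE...
# Prints the rx/tx packet counters for each interface found under BASEDIR
# (normally /sys/class/net). The helper name is hypothetical.
print_counters() {
    base=$1
    shift
    for nic in "$@"; do
        stats="$base/$nic/statistics"
        if [ -r "$stats/rx_packets" ] && [ -r "$stats/tx_packets" ]; then
            printf '%s rx_packets=%s tx_packets=%s\n' "$nic" \
                "$(cat "$stats/rx_packets")" "$(cat "$stats/tx_packets")"
        else
            printf '%s (no statistics found)\n' "$nic"
        fi
    done
}

print_counters /sys/class/net bond0 enp0s3 enp0s8 enp0s9
```

Running it a few times while traffic flows should show the counters of all three slaves growing in round-robin mode.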

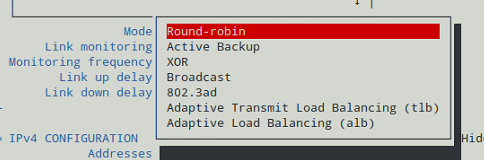

It is important to note that there are several bonding modes, each with its distinguishing characteristics. They are documented in section 4.5 of the Red Hat Enterprise Linux 7 Network Administration guide. Depending on your needs, you will choose one or the other.

In our current setup, we chose the Round-robin mode (see Fig. 3), which ensures packets are transmitted beginning with the first slave in sequential order, ending with the last slave, and starting with the first again.
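To make the sequential order concrete, here is a small simulation. It is a deliberately simplified model and not the kernel's actual implementation: packet number i is assigned to slave number i modulo the number of slaves. The function name `rr_slave` is hypothetical:

```shell
# rr_slave PACKET_INDEX SLAVE...
# Prints the slave that would carry packet PACKET_INDEX (counting from 0)
# under a plain round-robin policy. Simplified model, hypothetical helper.
rr_slave() {
    idx=$1
    shift
    # Skip (idx mod number-of-slaves) entries; the next one is the target
    shift $(( idx % $# ))
    echo "$1"
}

i=0
while [ "$i" -lt 6 ]; do
    echo "packet $i -> $(rr_slave "$i" enp0s3 enp0s8 enp0s9)"
    i=$(( i + 1 ))
done
# packet 0 -> enp0s3
# packet 1 -> enp0s8
# packet 2 -> enp0s9
# packet 3 -> enp0s3
# packet 4 -> enp0s8
# packet 5 -> enp0s9
```

With three slaves, every third packet goes out through the same interface, which is why the counters of all slaves grow at a similar pace in this mode.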

The Round-robin alternative is also called mode 0, and provides load balancing and fault tolerance. To change the bonding mode, you can use nmtui as explained before (see also Fig. 7):

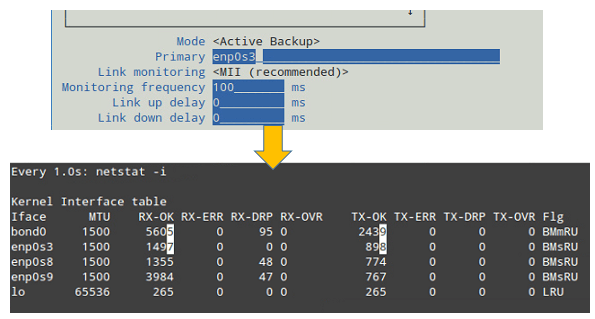

If we change it to Active Backup, we will be prompted to choose a primary slave, which will be the only active interface at any given time. If that card fails, one of the remaining slaves takes its place and becomes active.

Let’s choose enp0s3 to be the primary slave, bring bond0 down and up again, restart the network, and display the kernel interface table (see Fig. 8).

Note how data transfers (TX-OK and RX-OK) are now being made over enp0s3 only:

# ip link set dev bond0 down
# ip link set dev bond0 up
# systemctl restart network

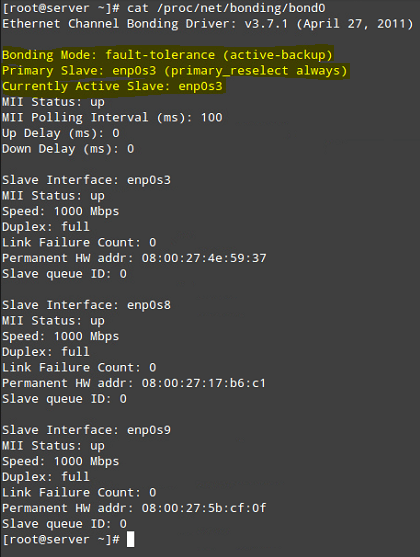

Alternatively, you can view the bond as the kernel sees it (see Fig. 9):

# cat /proc/net/bonding/bond0
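The full output of that file is fairly long. If you only care about the mode, the active slave, and the link state of each slave, a short awk filter can condense it. The sketch below assumes the usual field labels of the bonding driver's status file (`Bonding Mode`, `Currently Active Slave`, `Slave Interface`, and `MII Status`); the function name `bond_summary` is our own:

```shell
# bond_summary [FILE]
# Condenses a bonding status file (default: /proc/net/bonding/bond0) into
# one line for the mode, one for the active slave (if reported), and one
# per slave with its MII status. The helper name is hypothetical.
bond_summary() {
    awk -F': ' '
        /^Bonding Mode:/           { print "mode: " $2 }
        /^Currently Active Slave:/ { print "active: " $2 }
        /^Slave Interface:/        { slave = $2 }
        # The first MII Status line belongs to the bond itself and is
        # skipped because no slave name has been seen yet
        /^MII Status:/ && slave != "" { print "slave " slave ": " $2; slave = "" }
    ' "${1:-/proc/net/bonding/bond0}"
}

if [ -r /proc/net/bonding/bond0 ]; then
    bond_summary
fi
```

In Active Backup mode, the `active:` line should name enp0s3 as long as that card is up.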

Summary

In this chapter we have discussed how to set up and configure bonding in Red Hat Enterprise Linux 7 (also works on CentOS 7 and Fedora 22+) in order to increase bandwidth along with load balancing and redundancy for data transfers.

As you take the time to explore other bonding modes, you will come to master the concepts and practice related to this topic of the certification.

If you have questions about this article, or suggestions to share with the rest of the community, feel free to let us know using the comment form below.

I got some good info from this article. But I do have one question. After figure 1 you state, “To create bond0, we can either use nmtui“. Either implies there is another option. I’m looking for another way to configure bonding w/o using nmtui or nmcli. Did you have another option you were going to mention?

@David,

You can use the nmtui or nmcli tool to create a network bond.

Thank you for this guide.

Hello. I noticed a serious problem that needs your attention: How to configure automatic recovery of a failed port in RHEL7.0 teaming ? I successfully configured teaming (active backup) by following a similar procedure, in a RHEL 7.0 server.

The failover from port1 to port2 (from main to backup) was also successful by just applying the proper command, but when I tried to failover again from port2 to port1 (from backup to main), it was not possible.

I found port1 still in a failed state (down) and I had to recover it up manually. This is a big problem because if the backup (port2) also fails, the network will remain in a failed state and never recovers.

So is there a solution to this problem: a command to (try to) immediately recover (stimulate) a failed port after a failover, so that it becomes soon ready to switch back to main (active) again if/when necessary. This means the config must always try keeping both ports UP so that failover never fails. Thanks.

Great article. However, when a server reboots, the bond0 link doesn’t come up because there’s a bug in centos7 that doesn’t start up the bond0 interface automatically. To resolve my issue, I added the command below (the one given in the article above) to “/etc/rc.d/rc.local” and then ran “chmod +x /etc/rc.d/rc.local” to ensure that this script is executed during boot. The reason is that /etc/rc.local or /etc/rc.d/rc.local are no longer executed by default due to systemd changes. To still use those, you need to make /etc/rc.d/rc.local executable.

So again,

1. Open this file /etc/rc.d/rc.local

2. Add “ip link set dev bond0 up” to it (bond0 is your bonding interface or whatever it was named as).

3. Save and run “chmod +x /etc/rc.d/rc.local“

How can we do the same non-interactively (add within script)? Is there a nmcli or something?

@Kaustubh,

If you need to implement network bonding using a script I would just work with the interfaces configuration files. Let us know if you need help to do that.

Bonding non-interactively: https://www.lisenet.com/2016/configure-aggregated-network-links-on-rhel-7-bonding-and-teaming/

Mainly using nmcli, hope it helps.