In this article, we will review the wget utility, which retrieves files from the World Wide Web (WWW) using widely used protocols such as HTTP, HTTPS, FTP, and FTPS.

Wget is a free command-line utility and network file downloader, which comes with many features that make file downloads easy, including:

- Download large files or mirror complete web or FTP sites.

- Download multiple files at once.

- Set bandwidth and speed limit for downloads.

- Download files through proxies.

- Resume aborted downloads.

- Recursively mirror directories.

- Runs on most UNIX-like operating systems as well as Windows.

- Unattended / background operation.

- Support for persistent HTTP connections.

- Support for SSL/TLS for encrypted downloads using the OpenSSL or GnuTLS library.

- Support for IPv4 and IPv6 downloads.

Wget Command Syntax

The basic syntax of Wget is:

$ wget [option] [URL]

First, check whether wget is already installed on your Linux system, using one of the following commands.

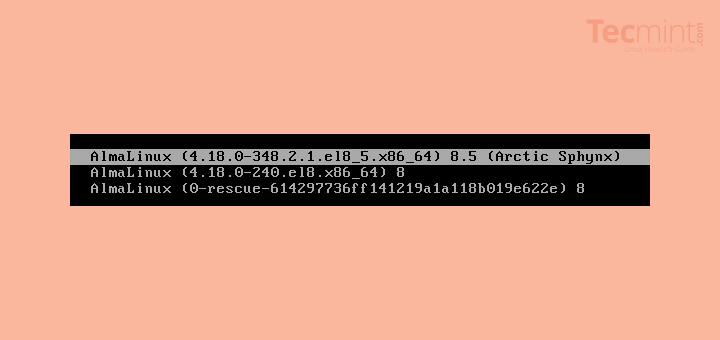

$ rpm -q wget [On RHEL/CentOS/Fedora and Rocky Linux/AlmaLinux]

$ dpkg -l | grep wget [On Debian, Ubuntu and Mint]
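On a distribution that uses neither rpm nor dpkg, a check that does not depend on the package manager can be sketched with the POSIX `command -v` builtin. The variable name `wget_status` is only for illustration.

```shell
# Look up wget on the PATH without asking any package manager.
if command -v wget >/dev/null 2>&1; then
    wget_status="installed"
else
    wget_status="missing"
fi
echo "wget is $wget_status"
```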

Install Wget on Linux

If Wget is not installed, you can install it using your Linux system’s default package manager as shown.

$ sudo apt install wget -y [On Debian, Ubuntu and Mint]

$ sudo yum install wget -y [On RHEL/CentOS/Fedora and Rocky Linux/AlmaLinux]

$ sudo emerge -a net-misc/wget [On Gentoo Linux]

$ sudo pacman -Sy wget [On Arch Linux]

$ sudo zypper install wget [On OpenSUSE]

The -y option used here is to prevent confirmation prompts before installing any package. For more YUM and APT command examples and options read our articles on:

- 20 Linux YUM Commands for Package Management

- 15 APT Command Examples in Ubuntu/Debian & Mint

- 45 Zypper Command Examples to Manage OpenSUSE Linux

1. Download a File with Wget

The following command downloads a single file and stores it in the current directory. While downloading, it also shows the progress, size, date, and time.

$ wget http://ftp.gnu.org/gnu/wget/wget2-2.0.0.tar.gz

--2021-12-10 04:15:16-- http://ftp.gnu.org/gnu/wget/wget2-2.0.0.tar.gz

Resolving ftp.gnu.org (ftp.gnu.org)... 209.51.188.20, 2001:470:142:3::b

Connecting to ftp.gnu.org (ftp.gnu.org)|209.51.188.20|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 3565643 (3.4M) [application/x-gzip]

Saving to: ‘wget2-2.0.0.tar.gz’

wget2-2.0.0.tar.gz 100%[==========>] 3.40M 2.31MB/s in 1.5s

2021-12-10 04:15:18 (2.31 MB/s) - ‘wget2-2.0.0.tar.gz’ saved [3565643/3565643]

2. Wget Download File with a Different Name

The -O (uppercase) option saves the download under a different file name. Here the file is saved as wget.zip; only the name changes, so the content is still a gzipped tarball.

$ wget -O wget.zip http://ftp.gnu.org/gnu/wget/wget-1.5.3.tar.gz

--2021-12-10 04:20:19-- http://ftp.gnu.org/gnu/wget/wget-1.5.3.tar.gz

Resolving ftp.gnu.org (ftp.gnu.org)... 209.51.188.20, 2001:470:142:3::b

Connecting to ftp.gnu.org (ftp.gnu.org)|209.51.188.20|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 446966 (436K) [application/x-gzip]

Saving to: ‘wget.zip’

wget.zip 100%[===================>] 436.49K 510KB/s in 0.9s

2021-12-10 04:20:21 (510 KB/s) - ‘wget.zip’ saved [446966/446966]
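When -O is not given, wget names the saved file after the last path component of the URL. As a rough sketch of that rule for a plain URL (no query string, no redirect), the default name can be derived with shell parameter expansion. The variable names and the custom file name below are assumptions for illustration, and the wget command is only printed, not run.

```shell
url="http://ftp.gnu.org/gnu/wget/wget2-2.0.0.tar.gz"

# Strip everything up to the last slash to get the default file name.
default_name=${url##*/}
echo "Without -O the file is saved as: $default_name"

# A custom name that keeps the .tar.gz suffix, so the file type stays obvious.
custom_name="wget-source.tar.gz"
echo "wget -O $custom_name $url"
```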

3. Wget Download Multiple Files with HTTP and FTP Protocol

Here we see how to download multiple files over HTTP and FTP with a single wget command. Wget fetches the URLs one after another, in the order given.

$ wget http://ftp.gnu.org/gnu/wget/wget2-2.0.0.tar.gz ftp://ftp.gnu.org/gnu/wget/wget2-2.0.0.tar.gz.sig

--2021-12-10 06:45:17-- http://ftp.gnu.org/gnu/wget/wget2-2.0.0.tar.gz

Resolving ftp.gnu.org (ftp.gnu.org)... 209.51.188.20, 2001:470:142:3::b

Connecting to ftp.gnu.org (ftp.gnu.org)|209.51.188.20|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 3565643 (3.4M) [application/x-gzip]

Saving to: ‘wget2-2.0.0.tar.gz’

wget2-2.0.0.tar.gz 100%[==========>] 4.40M 4.31MB/s in 1.1s

2021-12-10 06:46:10 (2.31 MB/s) - ‘wget2-2.0.0.tar.gz’ saved [3565643/3565643]

4. Wget Download Multiple Files From a File

To download multiple files at once, use the -i option with the location of the file that contains the list of URLs to be downloaded. Each URL needs to be added on a separate line as shown.

For example, the following file ‘download-linux.txt’ contains the list of URLs to be downloaded.

$ cat download-linux.txt

https://releases.ubuntu.com/20.04.3/ubuntu-20.04.3-desktop-amd64.iso

https://download.rockylinux.org/pub/rocky/8/isos/x86_64/Rocky-8.5-x86_64-dvd1.iso

https://cdimage.debian.org/debian-cd/current/amd64/iso-dvd/debian-11.2.0-amd64-DVD-1.iso

$ wget -i download-linux.txt

--2021-12-10 04:52:40-- https://releases.ubuntu.com/20.04.3/ubuntu-20.04.3-desktop-amd64.iso

Resolving releases.ubuntu.com (releases.ubuntu.com)... 91.189.88.248, 91.189.88.247, 91.189.91.124, ...

Connecting to releases.ubuntu.com (releases.ubuntu.com)|91.189.88.248|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 3071934464 (2.9G) [application/x-iso9660-image]

Saving to: ‘ubuntu-20.04.3-desktop-amd64.iso’

ubuntu-20.04.3-desktop-amd64 4%[=> ] 137.71M 11.2MB/s eta 3m 30s

...

If your URLs follow a numbering pattern, you can use the shell's brace expansion (a feature of shells such as Bash, not of wget itself) to generate all the matching URLs. For example, to download the series of Linux kernels from version 5.1.1 to 5.1.15, you can do the following.

$ wget https://mirrors.edge.kernel.org/pub/linux/kernel/v5.x/linux-5.1.{1..15}.tar.gz

--2021-12-10 05:46:59-- https://mirrors.edge.kernel.org/pub/linux/kernel/v5.x/linux-5.1.1.tar.gz

Resolving mirrors.edge.kernel.org (mirrors.edge.kernel.org)... 147.75.95.133, 2604:1380:3000:1500::1

Connecting to mirrors.edge.kernel.org (mirrors.edge.kernel.org)|147.75.95.133|:443... connected.

WARNING: The certificate of ‘mirrors.edge.kernel.org’ is not trusted.

WARNING: The certificate of ‘mirrors.edge.kernel.org’ is not yet activated.

The certificate has not yet been activated

HTTP request sent, awaiting response... 200 OK

Length: 164113671 (157M) [application/x-gzip]

Saving to: ‘linux-5.1.1.tar.gz’

linux-5.1.1.tar.gz 100%[===========>] 156.51M 2.59MB/s in 61s

2021-12-10 05:48:01 (2.57 MB/s) - ‘linux-5.1.1.tar.gz’ saved [164113671/164113671]

--2021-12-10 05:48:01-- https://mirrors.edge.kernel.org/pub/linux/kernel/v5.x/linux-5.1.2.tar.gz

Reusing existing connection to mirrors.edge.kernel.org:443.

HTTP request sent, awaiting response... 200 OK

Length: 164110470 (157M) [application/x-gzip]

Saving to: ‘linux-5.1.2.tar.gz’

linux-5.1.2.tar.gz 19%[===========] 30.57M 2.58MB/s eta 50s
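In a shell without brace expansion (plain POSIX sh, for example), a loop can write the same fifteen URLs to a list file for the -i option. This is only a sketch: the file name kernel-urls.txt and the variable names are assumptions, and the download itself is left as a comment.

```shell
base="https://mirrors.edge.kernel.org/pub/linux/kernel/v5.x"
list_file="kernel-urls.txt"

# Start with an empty list, then append one URL per patch release.
: > "$list_file"
i=1
while [ "$i" -le 15 ]; do
    echo "$base/linux-5.1.$i.tar.gz" >> "$list_file"
    i=$((i + 1))
done

echo "Wrote $(grep -c . "$list_file") URLs to $list_file"
# To start the downloads: wget -i kernel-urls.txt
```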

5. Wget Resume Uncompleted Download

When downloading a big file, the transfer may be interrupted. In that case, you can resume the download from where it left off with the -c option.

If you restart a download without -c while a partial file already exists, wget treats it as a fresh download and saves it under the same name with a .1 suffix. So, it’s good practice to add the -c switch when you download big files.

$ wget -c https://releases.ubuntu.com/20.04.3/ubuntu-20.04.3-desktop-amd64.iso

--2021-12-10 05:27:59-- https://releases.ubuntu.com/20.04.3/ubuntu-20.04.3-desktop-amd64.iso

Resolving releases.ubuntu.com (releases.ubuntu.com)... 91.189.88.247, 91.189.91.123, 91.189.91.124, ...

Connecting to releases.ubuntu.com (releases.ubuntu.com)|91.189.88.247|:443... connected.

HTTP request sent, awaiting response... 206 Partial Content

Length: 3071934464 (2.9G), 2922987520 (2.7G) remaining [application/x-iso9660-image]

Saving to: ‘ubuntu-20.04.3-desktop-amd64.iso’

ubuntu-20.04.3-desktop-amd64.iso 5%[++++++> ] 167.93M 11.1MB/s ^C

$ wget -c https://releases.ubuntu.com/20.04.3/ubuntu-20.04.3-desktop-amd64.iso

--2021-12-10 05:28:03-- https://releases.ubuntu.com/20.04.3/ubuntu-20.04.3-desktop-amd64.iso

Resolving releases.ubuntu.com (releases.ubuntu.com)... 91.189.88.248, 91.189.91.124, 91.189.91.123, ...

Connecting to releases.ubuntu.com (releases.ubuntu.com)|91.189.88.248|:443... connected.

HTTP request sent, awaiting response... 206 Partial Content

Length: 3071934464 (2.9G), 2894266368 (2.7G) remaining [application/x-iso9660-image]

Saving to: ‘ubuntu-20.04.3-desktop-amd64.iso’

ubuntu-20.04.3-desktop-amd64.iso 10%[+++++++=====> ] 296.32M 17.2MB/s eta 2m 49s ^
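For unattended use, resuming can be wrapped in a small retry loop. The sketch below is one possible shape, not a wget feature: the function name `retry` and the `RETRY_DELAY` variable are made up for this example, and the wget call itself is left as a comment.

```shell
# retry MAX CMD [ARGS...]: run CMD until it succeeds, at most MAX times.
retry() {
    max_attempts=$1
    shift
    attempt=1
    until "$@"; do
        if [ "$attempt" -ge "$max_attempts" ]; then
            echo "giving up after $attempt attempts" >&2
            return 1
        fi
        attempt=$((attempt + 1))
        sleep "${RETRY_DELAY:-5}"   # pause before the next attempt
    done
}

# Example: each attempt continues the partial file because of -c.
# retry 5 wget -c https://releases.ubuntu.com/20.04.3/ubuntu-20.04.3-desktop-amd64.iso
```

Wget already retries many transient failures on its own (see the --tries option); a wrapper like this only helps when the wget process itself exits with an error.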

6. Wget Mirror Entire Website

To download, mirror, or copy an entire website for offline viewing, you can use the following command, which makes a local copy of the website along with all its assets (JavaScript, CSS, images).

$ wget --recursive --page-requisites --adjust-extension --span-hosts --convert-links --restrict-file-names=windows --domains yoursite.com --no-parent yoursite.com

Explanation of the options in the above command:

- --recursive : Download the whole site.

- --page-requisites : Get all assets/elements (CSS/JS/images).

- --adjust-extension : Save files with .html on the end.

- --span-hosts : Include necessary assets from offsite as well.

- --convert-links : Update links to still work in the static version.

- --restrict-file-names=windows : Modify filenames to work in Windows as well.

- --domains yoursite.com : Do not follow links outside this domain.

- --no-parent : Don't follow links outside the directory you pass in.

- yoursite.com/whatever/path : The URL to download.
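To keep a comment next to each option in a script that actually runs, the options can be collected one at a time in the positional parameters. This is a sketch of that idea only: it prints the assembled command as a dry run, and `mirror_cmd` is a made-up variable name.

```shell
# Collect the options one per line so each can carry a real comment.
set -- --recursive                          # download the whole site
set -- "$@" --page-requisites               # fetch CSS, JS, and images
set -- "$@" --adjust-extension              # save pages with an .html suffix
set -- "$@" --span-hosts                    # allow assets from other hosts
set -- "$@" --convert-links                 # rewrite links for offline use
set -- "$@" --restrict-file-names=windows   # keep names valid on Windows too
set -- "$@" --domains yoursite.com          # stay within this domain
set -- "$@" --no-parent                     # never ascend above the start path

# Print the assembled command instead of running it (dry run).
mirror_cmd="wget $* yoursite.com"
echo "$mirror_cmd"
# To run it for real: wget "$@" yoursite.com
```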

7. Wget Download Files in Background

With the -b option, wget goes to the background immediately after startup. Unless you name a log file with the -o option, the output is written to wget-log in the current directory.

$ wget -b https://releases.ubuntu.com/20.04.3/ubuntu-20.04.3-desktop-amd64.iso

Continuing in background, pid 8999.

Output will be written to ‘wget-log’.

8. Wget Set File Download Speed Limits

With the --limit-rate=100k option, the download speed is limited to 100 KB/s. Combined with -b, the output is written to wget-log as shown below.

$ wget -c --limit-rate=100k -b https://releases.ubuntu.com/20.04.3/ubuntu-20.04.3-desktop-amd64.iso

Continuing in background, pid 9108.

Output will be written to ‘wget-log’.

View the wget-log file to check the download speed.

$ tail -f wget-log

5600K .......... .......... .......... .......... .......... 0% 104K 8h19m

5650K .......... .......... .......... .......... .......... 0% 103K 8h19m

5700K .......... .......... .......... .......... .......... 0% 105K 8h19m

5750K .......... .......... .......... .......... .......... 0% 104K 8h18m

5800K .......... .......... .......... .......... .......... 0% 104K 8h18m

5850K .......... .......... .......... .......... .......... 0% 105K 8h18m

5900K .......... .......... .......... .......... .......... 0% 103K 8h18m

5950K .......... .......... .......... .......... .......... 0% 105K 8h18m

6000K .......... .......... .......... .......... .......... 0% 69.0K 8h20m

6050K .......... .......... .......... .......... .......... 0% 106K 8h19m

6100K .......... .......... .......... .......... .......... 0% 98.5K 8h20m

6150K .......... .......... .......... .......... .......... 0% 110K 8h19m

6200K .......... .......... .......... .......... .......... 0% 104K 8h19m

6250K .......... .......... .......... .......... .......... 0% 104K 8h19m

...
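Settings that should apply to every download can also live in a wgetrc file instead of on the command line. The sketch below writes a small example file and notes how the WGETRC environment variable would point wget at it. The file name wgetrc.example and the chosen values are assumptions for illustration.

```shell
rc_file="wgetrc.example"

# wgetrc commands are written as "name = value" lines.
cat > "$rc_file" <<'EOF'
# Cap the transfer rate, as --limit-rate=100k does.
limit_rate = 100k
# Resume partial files, as -c does.
continue = on
# Retry each download up to 10 times.
tries = 10
EOF

echo "Wrote $rc_file"
# To use it for one command: WGETRC=wgetrc.example wget <URL>
```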

9. Wget Download Password Protected Files via FTP and HTTP

To download a file from a password-protected FTP server, you can use the options --ftp-user=username and --ftp-password=password as shown.

$ wget --ftp-user=narad --ftp-password=password ftp://ftp.example.com/filename.tar.gz

To download a file from a password-protected HTTP server, you can use the options --http-user=username and --http-password=password as shown.

$ wget --http-user=narad --http-password=password http://http.example.com/filename.tar.gz
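Passwords given on the command line can end up in the shell history. Wget can also read credentials from a netrc file (by default ~/.netrc). The sketch below writes an example entry to a demo file with owner-only permissions rather than touching the real ~/.netrc; the file name netrc.example and the password value are placeholders.

```shell
netrc_file="netrc.example"

# Create the file and restrict it to the owner before writing the secret.
: > "$netrc_file"
chmod 600 "$netrc_file"

# One entry per server: machine, login, password.
cat >> "$netrc_file" <<'EOF'
machine ftp.example.com login narad password CHANGE_ME
EOF

ls -l "$netrc_file"
# With the same entry in ~/.netrc, no password option is needed:
# wget ftp://ftp.example.com/filename.tar.gz
```

For one-off downloads, the --ask-password option prompts for the password instead.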

10. Wget Ignore SSL Certificate Check

To ignore the SSL certificate check while downloading files over HTTPS, you can use the --no-check-certificate option:

$ wget --no-check-certificate https://mirrors.edge.kernel.org/pub/linux/kernel/v5.x/linux-5.1.1.tar.gz

--2021-12-10 06:21:21-- https://mirrors.edge.kernel.org/pub/linux/kernel/v5.x/linux-5.1.1.tar.gz

Resolving mirrors.edge.kernel.org (mirrors.edge.kernel.org)... 147.75.95.133, 2604:1380:3000:1500::1

Connecting to mirrors.edge.kernel.org (mirrors.edge.kernel.org)|147.75.95.133|:443... connected.

WARNING: The certificate of ‘mirrors.edge.kernel.org’ is not trusted.

WARNING: The certificate of ‘mirrors.edge.kernel.org’ is not yet activated.

The certificate has not yet been activated

HTTP request sent, awaiting response... 200 OK

Length: 164113671 (157M) [application/x-gzip]

Saving to: ‘linux-5.1.1.tar.gz’

...

11. Wget Version and Help

With options --version and --help you can view the version and help as needed.

$ wget --version

$ wget --help

In this article, we have covered Linux wget commands with options for daily administrative tasks. Run man wget if you want to know more about it. If we’ve missed anything, do let us know through our comment box.

Hi,

I need help regarding the below. When I try to download a website using the command wget --mirror, it is going into the readLine loop and coming out to complete the request. Can anyone suggest on the same, please?

    private void downLoadReport() {
        Runtime rt = Runtime.getRuntime();
        try {
            System.out.println("InsideTRY");
            Process p = rt.exec(wgetDir + "wget --mirror http://alex.smola.org/drafts/thebook.pdf");
            // return result == 0;
            // Process p = Runtime.getRuntime().exec("wget --mirror http://askubuntu.com");
            System.out.println("Process " + p);
            BufferedReader reader = new BufferedReader(new InputStreamReader(p.getInputStream()));
            String line;
            while ((line = reader.readLine()) != null) {
                // System.out.println(lineNumber + "\n");
            }
            System.out.println(line + "\n");
            System.out.println("Download process is success");
            System.out.println("Wget --mirror has been successfully executed....");
        } catch (IOException ioe) {
            System.out.println(ioe);
        }
        /*
         * catch (InterruptedException e) { // TODO Auto-generated catch
         * block e.printStackTrace(); }
         */
    }

Everything is OK, but how do I find a particular software link (Google Chrome, TeamViewer, etc.)?

Great post! Have nice day ! :)

We are facing slowness when we are sending traffic using wget in linux machines through TCL .

Traffic passing gets stuck in the middle when the file size is more than 100MB.

Hence we are unable to measure the bandwidth during download.

Please suggest any way to address this issue.

You made my day. I tried without the ftp:// and failed one time after the other.

It’s due to permission of sending file…change it to 777 and try without ftp:// (it definitely works)

Hi…

I want to make a script to check a website and download the latest available version of a deb file and install it.

The problem I have is that on the website, each time the version changes, so the file name is changed, so I can not know the exact name to give the order to wget.

I wonder if there is any way to include wildcards in wget, or similar option.

As an example, suppose you want to download each x time the latest “Dukto” in 64 bits.

Their website is:

http://download.opensuse.org/repositories/home:/colomboem/xUbuntu_12.04/amd64/dukto_6.0-1_amd64.deb

How can I tell wget to look in that directory and download the dukto*.deb?

Thanks in advance.

What does “downloads file recursively” mean?

That means it will go through all the links on the website. So, for example, if you have a website with links to more websites, then it will download each of those and any other links that are in that website. You can set the number of layers, etc. (reference http://www.gnu.org/software/wget/manual/html_node/Recursive-Retrieval-Options.html ). This is actually how Google works, but for the whole internet: it goes through every link on every website to every other one. Also, if you use some more commands, you can actually download a whole site and make it suitable for local browsing, so if you have a multipage site that you use often, you could set it recursive and then open it up even without an internet connection. I hope that makes sense (the tl;dr version is that it follows every link on that website to more links and more files in a ‘tree’).

Also, sorry for the late reply(by a few years or so)