The command line is one of the most powerful parts of GNU/Linux. It is highly productive on its own, and the wide range of built-in and third-party command-line applications makes it even more capable. From the shell you can run many kinds of Internet applications, whether a torrent client, a dedicated downloader, or a web browser.

Here, we present five command-line Internet tools that are handy for downloading files and browsing the Internet from a Linux terminal.

1. rTorrent – Text-based BitTorrent Client

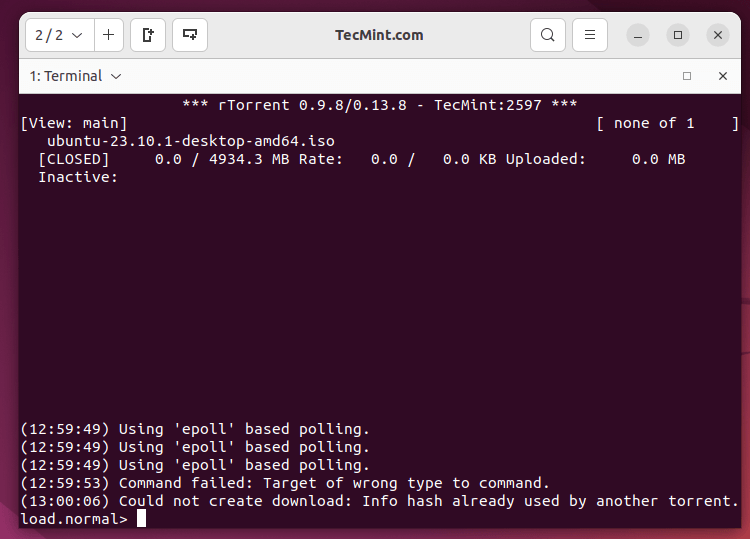

rTorrent is a text-based BitTorrent client written in C++ and designed for high performance. It is available for most standard Linux distributions, as well as for FreeBSD and macOS.

Install rTorrent on Linux

To install rTorrent on Linux, use the command that matches your distribution.

sudo apt install rtorrent [On Debian, Ubuntu and Mint]

sudo yum install rtorrent [On RHEL/CentOS/Fedora and Rocky/AlmaLinux]

sudo emerge -a net-p2p/rtorrent [On Gentoo Linux]

sudo apk add rtorrent [On Alpine Linux]

sudo pacman -S rtorrent [On Arch Linux]

sudo zypper install rtorrent [On OpenSUSE]

Check if rtorrent is installed correctly by running the following command in the terminal.

$ rtorrent
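Because running rtorrent opens its full-screen interface, a non-interactive check can be more convenient in scripts. The following is a minimal sketch, assuming a POSIX shell; the helper name is_installed is made up for this illustration and is not part of any of the tools.

```shell
# is_installed NAME: succeeds if NAME resolves to a command on this system.
# (Hypothetical helper name, used only in this example.)
is_installed() {
    command -v "$1" >/dev/null 2>&1
}

# Report the status of each tool covered in this article.
for tool in rtorrent wget curl w3m elinks; do
    if is_installed "$tool"; then
        echo "$tool: installed"
    else
        echo "$tool: not found"
    fi
done
```

The loop only reports what it finds; it does not install anything.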

Here are some useful rTorrent keybindings and their respective uses.

- Ctrl+q – Quit rTorrent. Pressing it twice shuts rTorrent down without sending a stop signal.

- Ctrl+s – Start a download.

- Ctrl+d – Stop an active download, or remove a download that is already stopped.

- Ctrl+k – Stop and close an active download.

- Ctrl+r – Run a hash check on a torrent without starting the upload/download.

- Left Arrow Key – Return to the previous screen.

- Right Arrow Key – Go to the next screen.

2. Wget – Command Line File Downloader

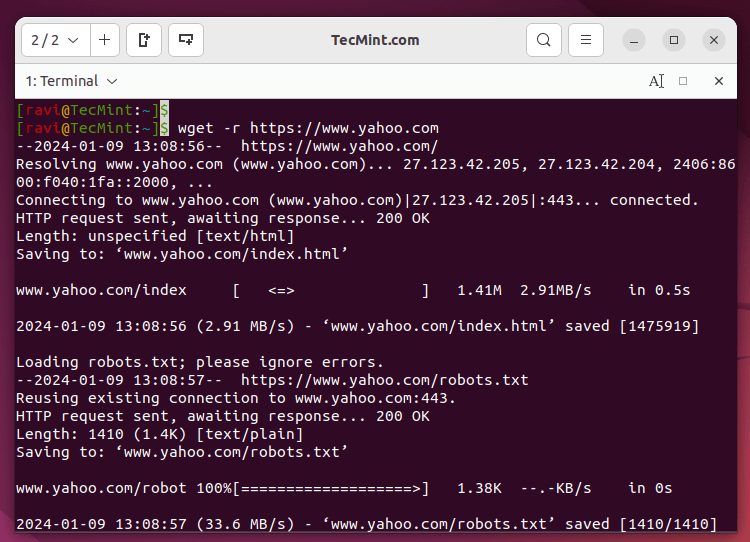

Wget is part of the GNU Project; its name is derived from "World Wide Web" and "get". It is well suited to recursive downloads and to saving HTML pages for offline viewing, and it is available for most platforms, including Windows, macOS, and Linux.

Wget can download files over HTTP, HTTPS, and FTP. It can also mirror an entire website, work through a proxy, and resume interrupted downloads.

Install Wget in Linux

Because Wget is a GNU project, it comes bundled with most standard Linux distributions, so there is usually no need to install it separately. If it is not installed by default, you can install it with your distribution's package manager.

sudo apt install wget [On Debian, Ubuntu and Mint]

sudo yum install wget [On RHEL/CentOS/Fedora and Rocky/AlmaLinux]

sudo emerge -a net-misc/wget [On Gentoo Linux]

sudo apk add wget [On Alpine Linux]

sudo pacman -S wget [On Arch Linux]

sudo zypper install wget [On OpenSUSE]

Basic Usage of Wget Command

Download a single file using wget.

wget http://www.website-name.com/file

Download a whole website, recursively.

wget -r http://www.website-name.com

Download specific types of files (say PDF and png) from a website.

wget -r -A png,pdf http://www.website-name.com
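The mirroring and resume features mentioned earlier can be sketched as small shell functions. This is only an illustration: the function names are made up for this example, and any URL you pass in is your own choice. The wget options themselves (--mirror, --convert-links, --page-requisites, --no-parent, -c, --limit-rate) are standard.

```shell
# Mirror a site for offline viewing: recurse, rewrite links to point at
# the local copies, fetch images/CSS, and stay below the starting path.
mirror_site() {
    wget --mirror --convert-links --page-requisites --no-parent "$1"
}

# Resume a partially downloaded file instead of starting from scratch.
resume_download() {
    wget -c "$1"
}

# Cap the bandwidth used by a download; the rate (e.g. 200k) is the
# second argument and falls back to 200k if omitted.
limited_download() {
    wget --limit-rate="${2:-200k}" "$1"
}
```

For example, mirror_site http://www.website-name.com would save a browsable local copy, and resume_download can be re-run on the same URL after an interrupted transfer.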

Wget enables custom and filtered downloads even on a machine with limited resources. The screenshot shows a wget download in which a website (yahoo.com) is being mirrored.

3. cURL – Command-Line Data Transfers

cURL is a client-side command-line tool for transferring data over many protocols, including FTP, HTTP, FTPS, TFTP, TELNET, IMAP, and POP3.

cURL differs from wget in that it supports additional protocols such as LDAP and POP3. It also works through proxies and can resume interrupted downloads.

Install cURL in Linux

cURL is either preinstalled or available in the repositories of most distributions. If it is not installed, use your package manager to get it.

sudo apt install curl [On Debian, Ubuntu and Mint]

sudo yum install curl [On RHEL/CentOS/Fedora and Rocky/AlmaLinux]

sudo emerge -a net-misc/curl [On Gentoo Linux]

sudo apk add curl [On Alpine Linux]

sudo pacman -S curl [On Arch Linux]

sudo zypper install curl [On OpenSUSE]

Basic Usage of cURL Command

To download a file from the specified URL and save it with the same name as the remote file.

curl -O https://example.com/file.zip

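To download multiple files in a single command (curl fetches them one after another unless the -Z/--parallel option, available in curl 7.66.0 and later, is added).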

curl -O URL1 -O URL2 -O URL3

To limit the download speed to 500 kilobytes per second.

curl --limit-rate 500k -O https://example.com/largefile.zip

To download a file from an FTP server using specified credentials.

curl -u username:password -O ftp://ftp.example.com/file.tar.gz

To display only the HTTP headers of a URL.

curl -I https://example.com
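Resuming and parallel transfers can be sketched in the same way. The function names below are hypothetical and the URLs are supplied by the caller; the curl options used (-C -, -L, -o, --parallel, --remote-name-all) are real, with --parallel requiring curl 7.66.0 or newer.

```shell
# Resume an interrupted download; "-C -" asks curl to work out the
# offset from the partial file already on disk.
resume_with_curl() {
    curl -C - -O "$1"
}

# Follow redirects (-L) and save under a name chosen by the caller (-o).
fetch_as() {
    curl -L -o "$2" "$1"
}

# Fetch several URLs at the same time. --remote-name-all applies -O
# to every URL, so each file keeps its remote name.
fetch_parallel() {
    curl --parallel --remote-name-all "$@"
}
```

For example, fetch_as https://example.com/file.zip backup.zip saves the file under a local name of your choosing.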

4. w3m – Text-based Web Browser

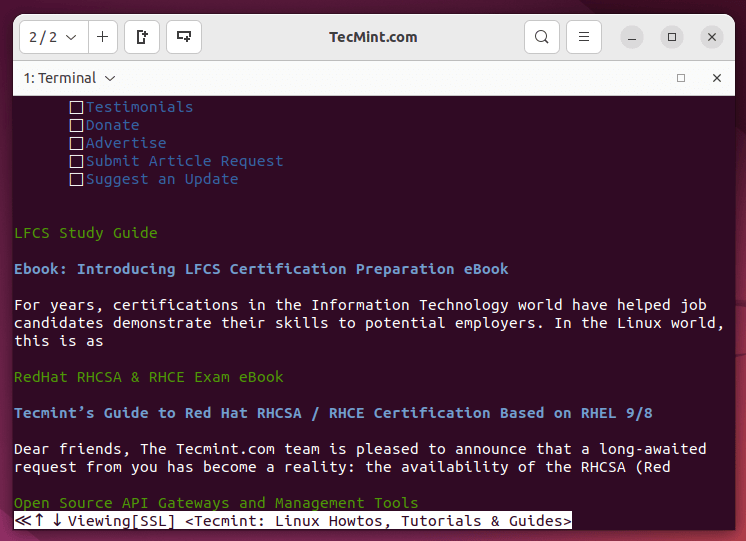

w3m is a free and open-source text-based web browser that lets you browse the Internet from a terminal. It renders web pages as plain text, providing a lightweight and efficient way to access web content without a graphical user interface.

Install w3m in Linux

w3m is also available in the repositories of most Linux distributions. If it is not already installed, use your package manager to get it.

sudo apt install w3m [On Debian, Ubuntu and Mint]

sudo yum install w3m [On RHEL/CentOS/Fedora and Rocky/AlmaLinux]

sudo emerge -a www-client/w3m [On Gentoo Linux]

sudo apk add w3m [On Alpine Linux]

sudo pacman -S w3m [On Arch Linux]

sudo zypper install w3m [On OpenSUSE]

To browse a website using w3m from the terminal, you can use the following command.

w3m www.tecmint.com
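Besides interactive browsing, w3m can also print a rendered page to standard output, which is useful in scripts. The sketch below assumes a made-up helper name (page_to_text) and an arbitrary default width of 80 columns; -dump and -cols are standard w3m options.

```shell
# Render a page (URL or local HTML file) as plain text on stdout.
# $1 = URL or file, $2 = optional line width (defaults to 80 columns).
page_to_text() {
    w3m -dump -cols "${2:-80}" "$1"
}
```

For example, page_to_text www.tecmint.com > tecmint.txt would save the rendered text of the page to a file.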

5. Elinks – Text-based Web Browser

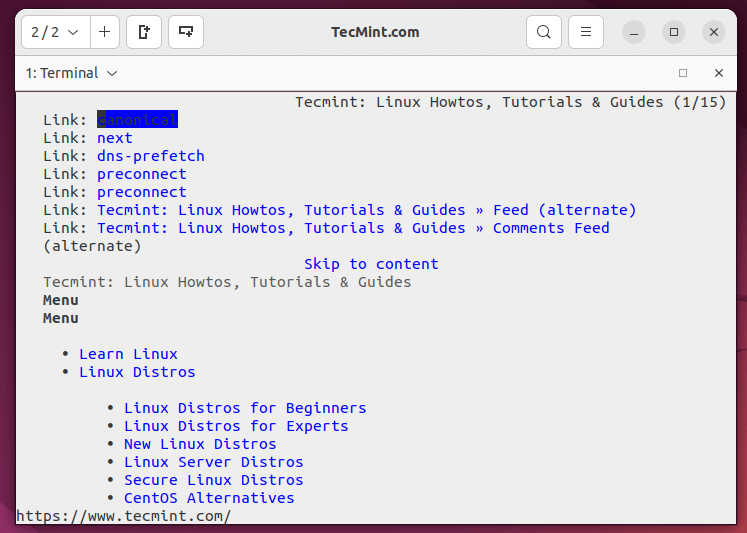

Elinks is a free text-based web browser for Unix and Unix-like systems. It supports HTTP and HTTP cookies, and it can be scripted in Perl and Ruby.

Tab-based browsing is well supported. Best of all, it supports the mouse and colour display, and it handles several protocols such as HTTP, FTP, and SMB over both IPv4 and IPv6.

Install Elinks in Linux

Elinks is also available in the repositories of most Linux distributions. If it is not installed, use your package manager to get it.

sudo apt install elinks [On Debian, Ubuntu and Mint]

sudo yum install elinks [On RHEL/CentOS/Fedora and Rocky/AlmaLinux]

sudo emerge -a www-client/elinks [On Gentoo Linux]

sudo apk add elinks [On Alpine Linux]

sudo pacman -S elinks [On Arch Linux]

sudo zypper install elinks [On OpenSUSE]

To browse a website using Elinks from the terminal, you can use the following command.

elinks www.tecmint.com

Conclusion

That’s all for now. I’ll return with another captivating article that I’m sure you’ll enjoy reading. Until then, stay tuned and connected to Tecmint. Don’t forget to share your valuable feedback in the comment section.

I have a script on my server. I am logged in to that server and want to copy the file to my local desktop. Please suggest a command that can download the file from the server to my local machine.

Thanks in advance.

@Rajoski,

You should use the rsync or scp command to copy the file from the remote server to your local machine. Please go through these articles on how to do it:

https://www.tecmint.com/scp-commands-examples/

https://www.tecmint.com/rsync-local-remote-file-synchronization-commands/

Hi

I am trying to download a zip file from the browser, and it shows a message like “0curl: (7) Failed to connect to 10.10.10.10 port 80: No route to host”.

Any idea how to resolve the issue? Thanks in advance.

@Pathi,

It seems the remote server's firewall is blocking the connection. Have you opened port 80 on the destination server that you're trying to connect to?

Wget doesn’t really work that well. Most sites block it and attempting to bypass that by adding a user-agent doesn’t really trick the site.

Wget does work very well if you spoof the user-agent string and make the target website ‘think’ that you are just a user, and not wget.

For the FTP protocol, lftp is much better and more feature-rich than wget, curl, or elinks. It is great for interactive use as well as scripting.

http://lftp.yar.ru/features.html

Yeah! Thanks for the feedback.

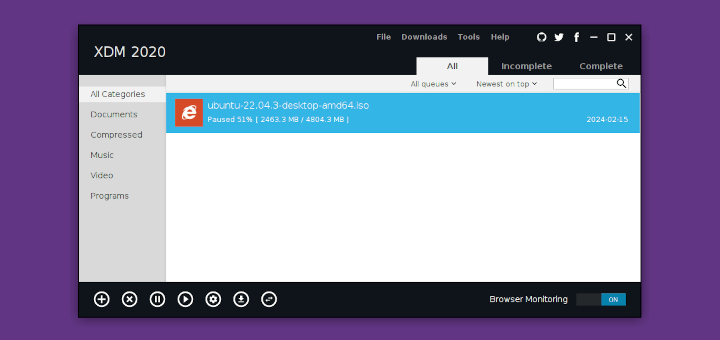

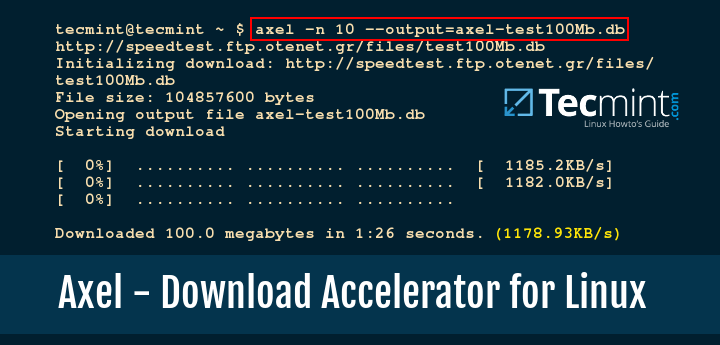

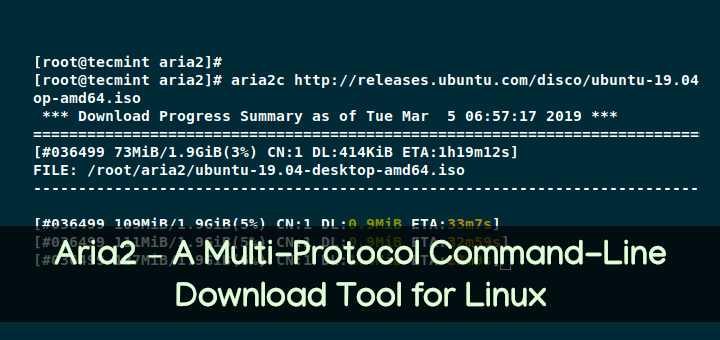

I think youtube-dl and axel would’ve been worth mentioning.

@ Abhilash, we will try to cover your suggestions in the next part of this article.

Thanks for Elinks ;)

:)

How do I use rTorrent with a magnet link?

What about:

fetch, links and lynx ?

As stated above,

we would be including your suggestions while updating this post.

Great Articles. wget is powerful.

Thanks @ April.

Axel and aria2c are great too

Taken into account @ bejo, we will be updating this post with your suggestions.

What about Lynx?

Yeah we will update this article with your suggestions.